Part-of-Speech Tagging is the process of determining the grammatical role of each word in a sentence, whether it is a noun, verb, adjective, or other part of speech. In other words, it is a way to teach a computer to understand the structure of language as humans do. In modern NLP and AI in business applications, part-of-speech tagging is a fundamental basis for text analysis, machine translation, chatbots, and sentiment analysis systems.

For example, take the sentence: “The quick brown fox jumps over the lazy dog.”

After POS tagging in NLP, the words will be tagged as follows:

| Word | POS Tag |

| The | Determiner |

| quick | Adjective |

| brown | Adjective |

| fox | Noun |

| jumps | Verb |

| over | Preposition |

| the | Determiner |

| lazy | Adjective |

| dog | Noun |

This kind of analysis allows models to not only see words, but also understand their functions and relationships within a sentence. This is a key step for accurate language processing and building intelligent NLP systems.

What is POS Tagging

Part-of-Speech Tagging is a process in which each word in a text is assigned its grammatical role: noun, verb, adjective, adverb, and others. But to understand why this is important, we need to look at the whole process in more detail.

The first stage is tokenization. The text is broken down into separate elements called tokens. These can be words, numbers, punctuation marks, or special characters. For example, the sentence:

“I can book a table for dinner.” is broken down into tokens: [“I”, “can”, “book”, “a”, “table”, “for”, “dinner”, “.”].

Next comes tagging itself — each token is assigned a part of speech. There are different methods: grammar rules, statistical models, and machine learning algorithms. After that, the computer generates a structured result suitable for analysis, search, or machine translation. For the above sentence, the result might look like this:

| Word | POS Tag |

| I | Pronoun |

| can | Modal Verb |

| book | Verb |

| a | Determiner |

| table | Noun |

| for | Preposition |

| dinner | Noun |

| . | Punctuation |

Why is this important for machines? Computers do not understand language like humans do. A word without context is just a set of symbols. In order for AI to perform translation, search, text analysis, or interact with users via chatbots, it must understand the functions of words and their relationships. POS tagging in NLP allows machines to distinguish between actions, objects, descriptions, and the relationships between them, which significantly improves the accuracy of models.

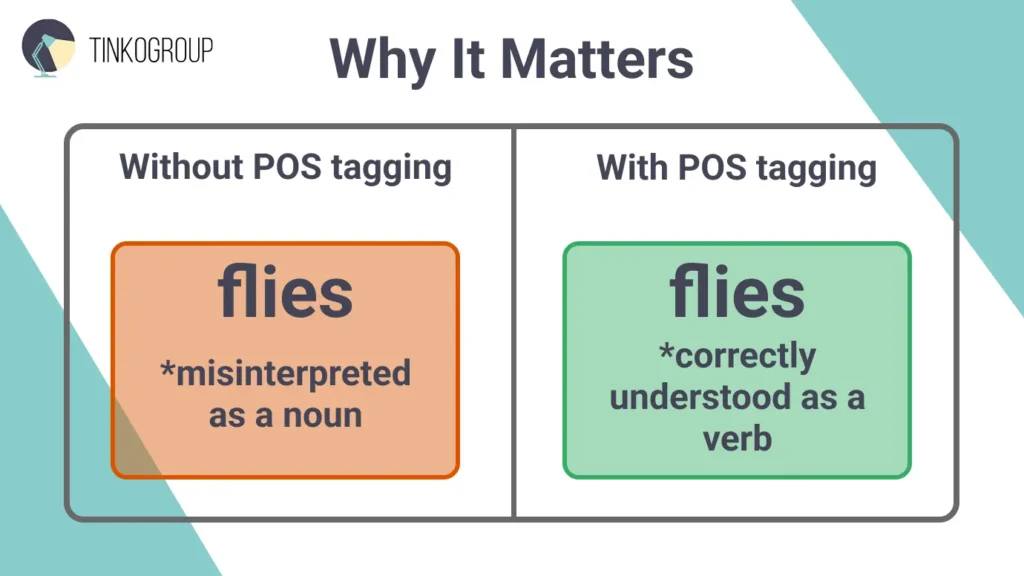

The complexity arises with ambitious and ambiguous words. For example:

- Book: can be a noun (“I read a book”) or a verb (“Please book a ticket”).

- Flies: can be a noun (“The flies are annoying”) or a verb (“She flies to Paris every month”).

- Light: adjective, noun, or verb depending on the context (“The light is bright,” “Turn on the light,” “She will light the candle”).

Without NLP part-of-speech tagging, the model will not be able to distinguish between these meanings, leading to errors in machine translation, tone analysis, or even voice assistants. For example, if AI does not distinguish between a verb and a noun, it may misinterpret a voice assistant command and give an incorrect result.

Thus, POS tagging machine learning provides accuracy and contextual understanding, allowing models to correctly interpret words, their functions, and connections in a sentence. This is a basic but extremely important step for all NLP projects, whether it’s AI in business applications, chatbots, search engines, or text sentiment analysis.

Importance of POS Tagging in NLP

Part-of-Speech Tagging plays a key role in modern NLP and AI systems. Part-of-speech tagging helps models understand language structure and distinguish between objects, actions, and descriptions, making them more accurate and context-oriented. This is especially important for businesses and startups: without high-quality POS tagging in NLP, most applications do not work properly, producing incorrect results or creating inconveniences for users.

Let’s take a closer look at why POS tagging is necessary and how it affects different areas of AI and NLP application.

Why It Matters in

Part-of-speech tagging plays a key role in a wide range of NLP applications. At first glance, it may seem like a technical detail that is easy to overlook, but in practice, part-of-speech tagging determines how accurately and contextually a system can interpret text. This linguistic structure becomes especially important for downstream NLP tasks such as text classification, where models must correctly understand word roles to assign meaning, intent, or category. From machine translation to search engines and voice assistants, an accurate understanding of the grammatical role of words helps models distinguish between actions, objects, and descriptions, ensuring accuracy and reliability.

In this section, we will examine how POS tagging in NLP affects key areas: machine translation, search engines, chatbots, voice assistants, and text sentiment analysis. Understanding these applications shows why competent part-of-speech tagging is not just a technical step, but a strategic tool for any AI project.

- Machine translation. Translating text between languages requires more than just replacing words; it requires understanding the structure of the sentence. Words are often ambiguous, and word order and grammar differ between languages. For example, the English sentence “I can book a table” without part-of-speech tagging may be translated incorrectly: the system will not understand that “book” is a verb here. With POS tagging machine learning, translation becomes accurate, grammatically correct, and contextually meaningful.

- Search engines. For search engines, it is important to interpret user queries correctly. If a user searches for “apple recipes,” tagging words helps to understand that apple is a noun, not a company brand, allowing relevant results to be returned. Identify parts of speech allows you to classify queries, improve ranking, and increase user satisfaction.

- Chatbots and voice assistants. Chatbots and voice assistants, such as Siri, Alexa, or Google Assistant, depend on accurate language comprehension. Different word forms and ambiguity can cause errors. For example, the command “Book a table” requires recognition of the verb book. NLP part-of-speech tagging helps the system correctly understand the query and perform the action, rather than perceiving the command as an object.

- Sentiment analysis / text analytics. For sentiment analysis, it is important to understand what refers to a subject and what expresses an action or emotion. In user reviews, words like “love” can be either a verb or a noun, and correct interpretation helps identify the exact attitude towards the product. POS tagging tools provide models with context and accuracy, which improves business analytics, forecasting, and automated recommendations.

How it Improves Model Accuracy and Context Understanding

Part-of-speech tagging not only structures text, but also allows models to distinguish between the roles of words, see the connections between them, and predict the meaning of sentences. This makes AI systems more “sensitive” to context: they can distinguish between tone, intent, and the grammatical functions of words.

For example, the sentence “The bank will close soon” could refer to a financial institution or the bank of a river. POS tagging in NLP allows us to determine the context: if bank follows an article and a verb, it most likely refers to a bank as an organization.

For machine learning in NLP, correct tagging improves model accuracy, reduces errors, and provides better results in business applications. Models become capable of making inferences not only based on words, but also based on their roles and relationships, which is critical for complex NLP systems.

Types of POS Tagging in NLP

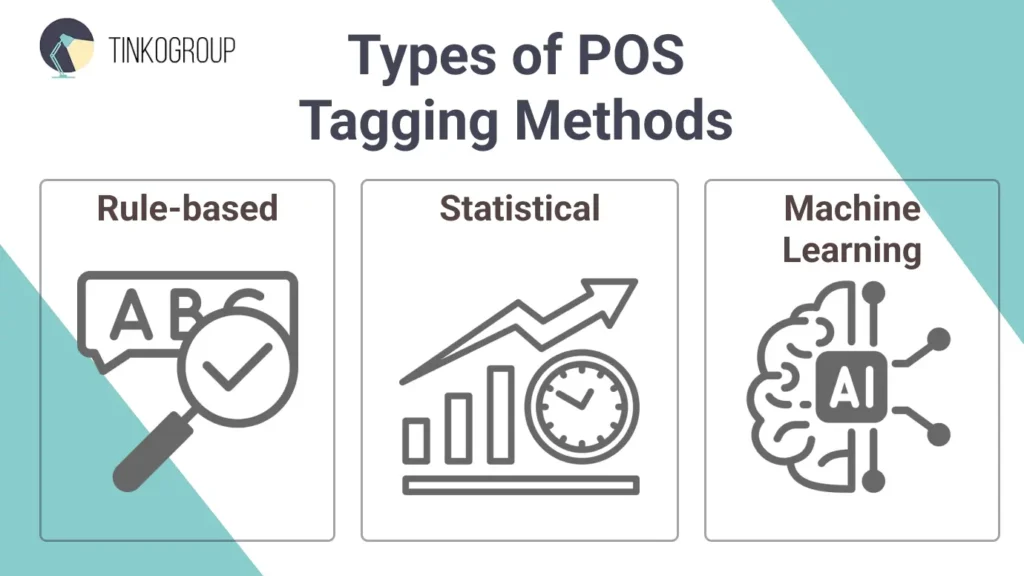

Part-of-Speech Tagging can be performed in different ways, each with its own characteristics, strengths, and limitations. The choice of method depends on the task, data volume, accuracy requirements, and project resources.

Rule-Based Methods

Rules and dictionaries are one of the first approaches to POS tagging machine learning. It is based on the grammatical rules of the language and ready-made dictionaries of parts of speech. For example, the system may know that the word “the” is almost always an article, and “ing” at the end of a word often indicates a verb form (gerund).

Example:

The word “run” in the dictionary can have the tags: Noun, Verb. The system selects the tag according to the rule based on the neighboring words: “I run daily” → Verb, “I went for a run” → Noun.

Pros:

- Does not require large amounts of data for training.

- Interpretability: it is easy to understand why the system chose a particular tag.

Cons:

- Difficult to scale for large and diverse texts.

- Cannot handle ambiguous words and contexts outside of predefined rules.

- Requires constant updating of dictionaries and rules when adding new words or slang.

Statistical Methods

Statistical approaches use probabilistic models to select tags based on context. The most well-known methods are Hidden Markov Models (HMM) and Conditional Random Fields (CRF). They analyze the frequency of tag and word combinations in annotated corpora and select the most likely tag for each word.

Example:

The word “book” occurs as a noun in 70% of cases and as a verb in 30%. The model selects the correct meaning based on the context of the sentence.

Pros:

- They take context into account.

- They can work with ambiguous words and reduce the number of errors compared to purely rule-based methods.

Cons:

- Requires a large annotated corpus for training.

- Sometimes it is difficult to explain why the model chose a particular tag (less interpretability).

Machine Learning / Deep Learning

Modern methods are based on neural networks and allow models to understand context at a deep level. RNN and BiLSTM analyze word sequences, taking into account previous and subsequent tokens, while Transformers and BERT-type models use an attention mechanism that allows them to take into account the entire context of a sentence and even a paragraph.

Example:

In the sentence “I saw the man with the telescope,” the neural network understands that “with the telescope” refers to the action, not the person, and correctly tags the words.

Pros:

- High accuracy, especially on complex texts.

- Ability to handle ambiguity, long contexts, and new words.

- Scales well for big data and multilingual projects.

Cons:

- Requires a lot of computing resources.

- Training data must be tagged with high quality (POS tagging tools, NLP part-of-speech tagging).

- More difficult to interpret the model’s decision.

Pros and Cons of Each Approach

| Approach | Pros | Cons |

| Rule-based | Simple, interpretable, does not require large amounts of data | Limited by rules, poor at handling context and ambiguity |

| Statistical (HMM, CRF) | Takes context into account, works with ambiguous words | Requires large amounts of marked-up data, less interpretable |

| ML / Deep Learning | High accuracy, scalability, takes long context into account | Requires resources and large amounts of high-quality data; more difficult to explain decisions |

In real-world projects, approaches are often combined: rules help beginners and small projects, while statistics and neural networks are used for high-precision POS tagging in productive AI systems.

POS Tagging in NLP: Real-World Applications

Part-of-Speech Tagging is not just an academic concept. In practice, it underpins a multitude of tools and services that millions of people and businesses use every day. From machine translation to voice assistants and automatic content moderation, understanding the functions of words in a sentence is critical to the accuracy and effectiveness of AI models.

Google Translate

In machine translation systems, accurate part-of-speech tagging allows algorithms to correctly interpret context. For example, the English word “book” can be a verb or a noun. Without NLP part-of-speach tagging, Google Translate would not be able to correctly translate the sentence “I will book a ticket” into German, where the verb requires a different form. Word tagging helps models choose the correct grammatical forms and construct meaningful translations, making the service more reliable and accurate.

Grammarly

Grammar and style checking services such as Grammarly rely on POS tagging tools to analyze sentences. Models recognize where verbs, nouns, adjectives, and adverbs are, which allows them to identify errors in agreement, incorrect word forms, and word order violations. For example, in the sentence “She go to school every day,” the system recognizes that go should be a third-person verb and automatically suggests a correction to “goes.”

Siri / Alexa / Google Assistant

Voice assistants and chatbots use POS tagging machine learning to correctly interpret user commands. The difference between a verb and a noun is critical here: the command “Book a table” must be recognized as an action, not an object. Accurate part-of-speech tagging allows AI to correctly execute requests, whether it’s booking a table, playing music, or setting a reminder.

Business Chatbots

Business chatbots for customer support and sales also depend on accurate tagging. With NLP part-of-speech tagging, the system understands the meaning of the request, distinguishes between objects and actions, which improves the accuracy of responses and the quality of interaction. For example, the request “I want to cancel my order” is correctly interpreted as an order cancellation action, not just a mention of the word “order.” This improves the user experience and reduces the load on live operators.

Content Moderation

Content moderation platforms use POS tagging in NLP to distinguish between insults, spam, or potentially dangerous content. Accurate part-of-speech tagging helps models distinguish between objects and actions, recognize context, and prevent false positives. For example, the word “kill” can be a verb in a threat (“I will kill you”) or a noun in a safe context (“Kill the weeds in the garden”). Without tagging, the model will not be able to correctly assess the meaning of the message.

Part of Speech Tagging Example

To understand how Part-of-Speech Tagging works, let’s look at a simple example. Part-of-speech tagging allows a machine to see each word and its grammatical role, and then use that information to analyze, translate, or generate text.

Simple Breakdown of a Sentence with Tags

Let’s take the sentence: “The quick brown fox jumps over the lazy dog.” Each word has its own function: the article indicates a noun, the adjectives describe the characteristics of the object, and the verb indicates an action. Thanks to POS tagging machine learning, the model can understand the structure of the sentence and correctly interpret its meaning.

Table: Word → POS tag

| Word | POS Tag |

| The | Determiner |

| quick | Adjective |

| brown | Adjective |

| fox | Noun |

| jumps | Verb |

| over | Preposition |

| the | Determiner |

| lazy | Adjective |

| dog | Noun |

This table shows how NLP part-of-speech tagging structures text and provides context for further processing.

Python Code Snippet

Below is an example in Python using the spaCy library, demonstrating how to quickly tag parts of speech:

import spacy

# Download English model

nlp = spacy.load(“en_core_web_sm”)

# Text for analysis

sentence = “The quick brown fox jumps over the lazy dog.”

# Text processing

doc = nlp(sentence)

# Output of the word and its POS tag

for token in doc:

print(token.text, token.pos_)

Example SQL output:

The DET

quick ADJ

brown ADJ

fox NOUN

jumps VERB

over ADP

the DET

lazy ADJ

dog NOUN

. PUNCT

This approach allows for automatic and rapid identification of parts of speech in a sentence, ensuring accurate text analysis and contextual understanding for NLP models.

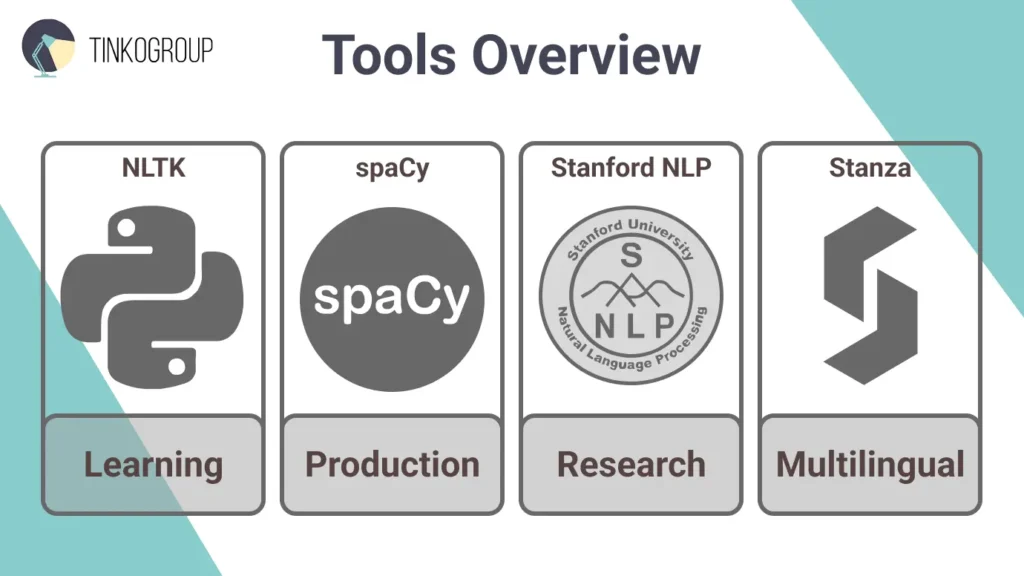

POS Tagging Tools

Today, there are many ready-made libraries for working with Part-of-Speech Tagging, each of which solves its own tasks — from academic experiments to industrial solutions. The choice of tool depends on the project’s goals, language, and requirements for speed and quality of analysis. Let’s take a look at the most popular libraries that are actively used in the world of machine learning in NLP and corporate AI products.

Overview of Popular Tools and Libraries

To effectively implement part-of-speech tagging, you don’t need to build everything from scratch. The NLP community has already developed powerful tools that automate most of the work, from tokenization to accurate grammatical tagging. These libraries allow you to quickly identify parts of speech in a sentence, experiment with models, and integrate ready-made solutions into real products.

Each of them was created for different purposes: some are ideal for training and research, while others are better suited for industrial systems where scale and speed are important. Below, we will look at the most popular POS tagging tools used in academia, startups, and large AI companies around the world.

NLTK

Natural Language Toolkit (NLTK) is one of the oldest and most well-known libraries for natural language processing. It is ideal for training, experiments, and basic NLP tasks. NLTK has built-in tokenizers, POS taggers, and lexical resources such as WordNet. However, its speed and performance are limited — in real business applications, it is more often used for prototypes and academic projects. Example: NLTK allows you to identify parts of speech with just a few lines of code:

import nltk

nltk.download(‘averaged_perceptron_tagger’)

nltk.download(‘punkt’)

sentence = nltk.word_tokenize(“AI is transforming the business world.”)

print(nltk.pos_tag(sentence))

spaCy

spaCy is the industry standard in POS tagging tools. It is optimized for speed and scale, which is why it is often used in real-world products such as chatbots, search engines, and content systems.

This library supports models for dozens of languages and easily integrates with machine learning frameworks.

Why choose spaCy:

- high speed processing of large volumes of text;

- accuracy of POS tags thanks to trained neural network models;

- integration with deep learning libraries (PyTorch, TensorFlow).

Stanford NLP

Stanford CoreNLP is a legendary library from Stanford, built on Java. It is often used for academic research and projects that require high accuracy and explainability of models.

Its POS tagger is based on Conditional Random Fields (CRF) and provides deep grammatical analysis.

Although Stanford NLP is less convenient for integration into Python, many use it via REST API or wrappers such as stanza.

Stanza

Stanza is a modern continuation of Stanford NLP, developed by the same researchers, but with native support for Python and PyTorch.

It uses deep learning (BiLSTM, Transformer) and provides the highest accuracy in POS tagging. Stanza is especially useful for multilingual projects and academic research where morphological accuracy is important.

Example: Stanza can automatically identify parts of speech in a sentence using models trained on dozens of languages, including rare ones.

When to Use which Tool

- NLTK is the best choice for learning, courses, and experiments. If you are an NLP student or want to quickly understand how part of speech tagging works, start with NLTK.

- spaCy is ideal for AI in business applications: fast, stable, and scalable. It is used for chatbots, analytics systems, recommendations, and any production tasks.

- Stanford NLP is suitable for scientific research and tasks where the explainability of algorithms is important, not just speed.

- Stanza — the choice for advanced deep learning projects that require high accuracy and support for many languages.

Thus, the choice of tool depends on the balance between speed, accuracy, and context of use. In real products, a hybrid approach is often used: for example, preprocessing in spaCy and detailed analysis in Stanza.

POS Tagging and Machine Learning

Modern language processing systems are impossible without machine learning. When we talk about Part-of-Speech Tagging, it is machine learning algorithms in NLP that allow models to see patterns in text, understand context, and grasp the grammatical structure of sentences. However, at the heart of any model lies one thing: high-quality annotated data. Without accurate annotation, even the most complex algorithm will not be able to learn to distinguish parts of speech, and therefore understand language.

How Annotated Data Enables POS Tagging Models

Each machine learning POS tagging model undergoes training on a large set of texts, where each word is already tagged with its part of speech. These tagged sentences become training material for the algorithm — the more data there is and the higher its quality, the more accurate the model will be.

For example, if the system is trained on millions of sentences where the words “run” and “book” appear in different contexts (as a verb and as a noun), it gradually learns to identify parts of speech based on context, rather than just on a dictionary.

Thus, annotated data is not just a set of texts, but the foundation on which the model’s ability to understand language like a human being is built.

Why Quality Annotation Improves Accuracy

The accuracy of POS tagging in NLP directly depends on the quality of the annotation. If there are errors in the data — words are tagged incorrectly or tags are inconsistent — the model will begin to reproduce these errors in its predictions.

High-quality annotation is achieved when there are clear annotation guidelines, and the annotation team is trained and uses multi-level quality assurance (annotation quality assurance). Poorly labeled datasets can lead to significant issues in production. To avoid common pitfalls, check out our guide on the Top 7 Data Labeling Mistakes That Hurt Your Machine Learning Model Performance.

For example, in English, a simple confusion between a gerund and an active verb can change the meaning of a phrase. In languages with rich morphology (German, Russian, Finnish), such errors increase significantly if there are no clear rules and QA procedures.

Good annotated data not only improves the accuracy of the POS tagger, but also reduces the need for model retraining, cuts down on refinement costs, and increases the stability of results on new data.

Reliable Data Services Delivered By Experts

We help you scale faster by doing the data work right - the first time

Connection to Data Annotation Providers

In practice, creating high-quality datasets for NLP part-of-speech tagging requires experience, methodology, and a team capable of working with large volumes of text. This is where professional data annotation outsourcing companies such as Tinkogroup come into play.

Tinkogroup helps businesses and AI teams build reliable annotation pipelines — from training data annotation preparation to multi-level quality control. Their experts create scalable processes for custom data tagging and POS marking, allowing customers to launch projects faster and get accurate, training-ready models.

By combining human expertise and automation (human-in-the-loop), Tinkogroup ensures continuous data improvement, helping clients develop AI-based products in business applications — from chatbots to content analysis systems.

Challenges and Limitations

Despite impressive progress in part-of-speech tagging, even the most advanced models face limitations. Language is not just a set of rules, but a living, changing system where context, culture, and intonation play a huge role. Machines still struggle to grasp all the nuances of human speech, especially when it comes to ambiguity, informal expressions, or languages with complex grammar.

Ambiguity

One of the main challenges for POS tagging in NLP is ambiguity. In English (and other languages), many words can play different roles depending on the context.

For example, the word “book” can mean both a noun (“I read a book”) and a verb (“Please book a ticket”).

Or “flies” can be a verb (“Time flies fast”) or a noun (“Flies buzz around”).

For humans, the meaning is obvious from the context, but the model has to figure it out based on probabilities and past examples. Even modern architectures like Transformers and BERT make mistakes if the context is too short or ambiguous. This is why it’s crucial to understand how annotation bias builds unfair AI from the ground up and how to mitigate it during the tagging process.

Slang, Abbreviations, New Words

Modern language is constantly changing. Every day, dozens of new expressions, memes, and abbreviations appear on social networks and messengers. This is a real puzzle for POS taggers.

Words like “LOL,” “DM,” “vlog,” or “AI-powered” are not always found in dictionaries, which means that the model may misclassify them. Even if the system is trained on huge corpora, the emergence of new words requires regular data updates and model retraining.

In addition, slang often violates grammatical norms. Phrases like “That’s lit!” or “He kinda sus” create confusion: models don’t know how to tag “lit” or “sus” because they can behave like adjectives but don’t fit into standard grammatical rules.

As a result, POS tagging becomes not just a classification task, but a task of cultural adaptation — the model must understand a language that lives and evolves alongside society.

Languages with Free Word Order and Complex Morphology

While the sentence structure in English or Spanish is relatively stable, languages such as Russian, Finnish, Hungarian, or Turkish pose real challenges. In these languages, word order can change without losing meaning, and endings carry the main grammatical load.

For example, in Russian, the phrase “The boy sees the dog” can be rearranged as “The dog is seen by the boy” — the meaning is the same, but the order is different. This is a challenge for a POS tagger: it must analyze endings, not just word position.

In addition, such languages have dozens of declensions, conjugations, and forms, which dramatically increases the number of unique word forms. For NLP part-of-speech tagging, this means the need for large corpora and accurate morphological annotation.

Even modern neural network models trained on millions of sentences can make mistakes if they lack contextual clues. Therefore, for languages with complex morphology, the quality of training data annotation and the availability of expert linguists who help models understand the structure of the language are especially important.

Conclusion

Part-of-Speech Tagging is a fundamental element of modern Natural Language Processing. It helps models understand language structure, grasp the meaning of phrases, and distinguish between the meanings of words depending on context. Without accurate POS tagging, it is impossible to build high-quality machine translation systems, voice assistants, chatbots, or search algorithms.

POS tagging is not just markup, but a process that combines linguistics and machine learning, enabling models to understand human speech. It enables deep text analysis, communication automation, and meaning extraction from data.

However, the key to the success of such systems is high-quality annotated data. The more accurate and in-depth the markup, the better the model understands the language and the higher its performance. This is where an experienced team plays a crucial role.

Tinkogroup helps companies build scalable, accurate, and flexible data annotation pipelines for POS Tagging, NER, Sentiment Analysis, and other NLP tasks. We combine trained teams of linguists, modern tools, and rigorous quality processes to provide our clients with reliable datasets for training their models.

If you want to improve the accuracy of your NLP models and build a sustainable annotation infrastructure, learn more on our Data Annotation Services page.

What is the main purpose of POS tagging in NLP?

The main goal of POS tagging is to identify the grammatical role of each word (noun, verb, adjective, etc.) in a sentence. This allows AI models to understand the linguistic structure, resolve ambiguity, and accurately process the relationship between words for tasks like translation or sentiment analysis.

How does POS tagging improve machine learning model accuracy?

By assigning specific tags to words, POS tagging provides essential context that simple text analysis lacks. This prevents errors in interpreting words with multiple meanings (e.g., “book” as a noun vs. “book” as a verb), leading to more precise and reliable results in chatbots, search engines, and voice assistants.

Which tool is best for POS tagging in a production environment?

While NLTK is excellent for learning and prototyping, spaCy is considered the industry standard for production due to its speed, scalability, and pre-trained neural network models. For projects requiring extreme morphological precision across multiple languages, Stanza is often the preferred choice.