In the artificial intelligence ecosystem, there is a subtle but crucial factor for success: the people who create meaning from data.

Models may be mathematically flawless, tools may be powerful, and servers may be fast, but without accurate and thoughtful annotation, this entire technological arsenal becomes useless noise.

Many companies understand that high-quality data is the fuel for machine learning. But few think about who prepares this fuel and how. That is why choosing the right data annotator is not just a technical question, but a strategic one.

Professionals working in the field of data annotation joke: “Well-annotated data is like a well-translated book. If the translator doesn’t understand the meaning, the reader loses everything.” That’s why behind every successful AI/ML project there is not only an algorithm, but also a team of people who can see what the machine cannot: context, exceptions, meaning.

This article shows how to select annotators, build a team, and maintain quality on the path from pilot project to scaling, while balancing speed, accuracy, and cost.

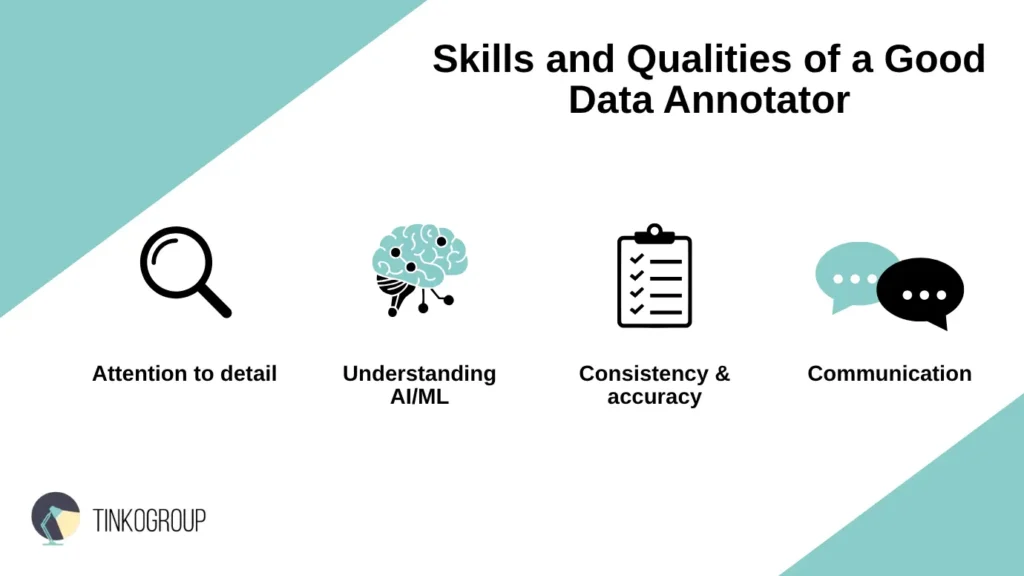

Skills and Qualities of a Good Data Annotator

Machine learning labs often talk about “data,” “metrics,” and “models,” but hidden within every dataset is human work. To understand whom to hire and how to build processes, you need to know which data annotation skills distinguish a strong specialist from a mediocre one.

- Attention to detail. An annotator works at the level of pixels, phrases, and tones. A single incorrectly labeled object can distort the model’s training results. The best specialists notice the slightest deviations, just as a sound engineer picks out microphone noise in a symphony.

- Basic understanding of the AI/ML context. Annotation should not be mechanical. It is important for a specialist to understand why they are labeling this data and how it affects the model’s behavior. Only then does annotation cease to be a routine task and become part of the research process.

- Consistency and accuracy. The model learns from patterns, so if a person labels inconsistently, the model absorbs that inconsistency and makes mistakes. Good annotators develop internal discipline: labeling a thousand similar images in exactly the same way is an art in itself.

- Communication skills. Annotators often work in different countries and time zones. That is why communication is not a formality, but part of quality. A mistake can be corrected, but a misunderstanding cannot. Experienced professionals know how to give feedback, discuss questionable cases, and are not afraid to admit inaccuracies.
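Consistency can be measured rather than just hoped for. Below is a minimal sketch that assumes a hypothetical setup: a few control items are shown to the same annotator twice within a batch. The share of repeated items that received the same label both times gives a rough self-consistency score. The record format and the `self_consistency` function are illustrative and are not part of any specific annotation tool.

```python
from collections import defaultdict


def self_consistency(records):
    """Share of repeated items that one annotator labeled identically every time.

    `records` is a hypothetical list of (item_id, label) pairs from ONE annotator,
    where some item_ids were deliberately shown more than once.
    Returns None if no item was repeated (nothing to measure).
    """
    labels_by_item = defaultdict(list)
    for item_id, label in records:
        labels_by_item[item_id].append(label)

    # Keep only the control items that were actually shown more than once.
    repeated = {i: labels for i, labels in labels_by_item.items() if len(labels) > 1}
    if not repeated:
        return None
    consistent = sum(1 for labels in repeated.values() if len(set(labels)) == 1)
    return consistent / len(repeated)


records = [
    ("img_001", "car"), ("img_002", "truck"), ("img_003", "car"),
    ("img_001", "car"),   # repeat: same label as before
    ("img_002", "bus"),   # repeat: different label
]
print(self_consistency(records))  # 0.5
```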

How to Train Data Annotators

When a company forms a new team of annotators, the first temptation is to hand out instructions and get to work. But this is precisely when the foundation for future mistakes is laid. How you train data annotators determines not only the quality of the data, but also the stability of the entire ML system.

Onboarding & Annotation Guidelines

Good training starts not with technical tools, but with context. A new annotator must understand why the project needs annotation: what tasks the model solves, what is considered a “success,” and what is considered “noise.”

Companies that pay attention to the data annotation training process start with a detailed onboarding course:

- an overview of the domain (e.g., medicine, retail, car navigation);

- visual examples of typical and borderline cases;

- clear Annotation Guidelines with illustrations and counterexamples.

Best practices include an “interactive onboarding” format, where annotators complete a mini-quest: they mark real data fragments, receive automatic feedback, and see where they went wrong.

This approach not only speeds up training, but also builds confidence and a sense of personal responsibility for data accuracy.

Using Training Datasets for Practice

After theory comes practice — just like a pilot before their first flight.

Experienced project managers always set aside a separate training dataset where annotators can experiment without fear of damaging production data.

At this stage, it is important to show what “quality annotation” is:

- how to build tag logic;

- how to avoid subjective decisions;

- how to handle complex or ambiguous cases.

Many companies implement a “pair review” system — annotators check each other’s work. This is not control, but an exchange of experience. By observing other people’s mistakes, a person quickly understands how to avoid their own.
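A pair review rotation needs only one firm rule: nobody reviews their own work. The sketch below is a hypothetical illustration and not a feature of any particular platform. It shifts the roster by a different offset each round, so that reviewer pairs change over time and annotators see a variety of colleagues’ work.

```python
def assign_reviewers(annotators, round_no=0):
    """Map each annotator to a peer reviewer, never themselves.

    Hypothetical rotation scheme: in round r, annotator i is reviewed by
    annotator (i + shift) mod n, where shift cycles through 1..n-1.
    """
    n = len(annotators)
    if n < 2:
        raise ValueError("peer review needs at least two annotators")
    shift = 1 + round_no % (n - 1)  # always between 1 and n-1, never 0
    return {author: annotators[(i + shift) % n] for i, author in enumerate(annotators)}


team = ["Ana", "Ben", "Chen", "Dana"]
print(assign_reviewers(team, round_no=0))
# {'Ana': 'Ben', 'Ben': 'Chen', 'Chen': 'Dana', 'Dana': 'Ana'}
```

The offset always stays between 1 and n - 1, so no one is paired with themselves. Over n - 1 rounds, each person is reviewed by every colleague once.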

QA Processes: Peer Review, Consensus Scoring

Quality control is not a final stage, but a continuous cycle. If QA only appears “at the end of the project,” then quality has already been lost somewhere. Market leaders build a system where each annotation goes through several levels of verification:

- Peer review — colleagues evaluate each other’s work, forming a culture of internal trust.

- Consensus scoring — an algorithm compares the markups of different specialists and identifies discrepancies.

- Feedback loop — identified errors are not simply recorded, but turned into training cases.
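At its simplest, consensus scoring is a majority vote with an agreement threshold. The sketch below assumes every item was labeled by several annotators. It takes the most common label as the consensus and flags items where agreement falls below a threshold. The threshold of 0.75 is an arbitrary illustrative value. The flagged list is what a feedback loop would turn into training cases. Dedicated QA tooling often relies on chance-corrected agreement statistics such as Cohen’s or Fleiss’ kappa instead of raw majority share.

```python
from collections import Counter


def consensus(labels_by_item, min_agreement=0.75):
    """Majority-vote consensus with a discrepancy flag.

    labels_by_item: {item_id: [label from annotator 1, label from annotator 2, ...]}
    min_agreement: hypothetical threshold; items whose top label share is below it
    are flagged for review and can be reused as training cases.
    With a threshold above 0.5, ties between top labels are always flagged.
    """
    results, flagged = {}, []
    for item_id, labels in labels_by_item.items():
        top_label, top_count = Counter(labels).most_common(1)[0]
        agreement = top_count / len(labels)
        results[item_id] = (top_label, agreement)
        if agreement < min_agreement:
            flagged.append(item_id)
    return results, flagged


votes = {
    "img_101": ["cat", "cat", "cat", "cat"],
    "img_102": ["cat", "dog", "cat", "cat"],
    "img_103": ["dog", "cat", "fox", "dog"],
}
results, flagged = consensus(votes)
print(results["img_103"])  # ('dog', 0.5)
print(flagged)             # ['img_103']
```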

A well-structured QA process develops people instead of punishing them. Teams where annotators feel involved in the process can improve accuracy by up to 30% without increasing the budget.

Continuous Learning and Upskilling

In the world of AI, learning never ends. Today, the team labels images for retail analytics, tomorrow — medical images, the day after tomorrow — data from drones. Each new domain requires new knowledge. Companies that invest in continuous learning win in the long run. Regular guideline updates, workshops on new tools, and analysis of edge cases all turn annotators into professionals, not just executors. One of Tinkogroup’s leading data project managers notes: “We realized that an annotator who learns is an asset, not an expense. They grow along with the model.” That is why modern training is not a week-long course, but a constant dialogue between people and technology.

Choosing the Right People for the Job

When a data annotation project moves beyond the testing phase, managers are faced with a key question: who to hire and how to build a sustainable team?

A mistake at this stage can cost not only money, but also trust in the model. After all, data is its perception of the world, and annotators are the eyes and hands that shape that perception. That is why understanding the factors to consider when choosing a data annotator becomes crucial: where to look for people, how to check their quality, and why team dynamics are sometimes more important than individual skills.

Where to Find Candidates

The modern market offers three main options: freelancers, agencies, and in-house teams.

Each option has its own logic, risks, and speed.

- Freelancers. When a startup needs to scale quickly, freelancers are the natural choice. They are flexible, inexpensive, and available worldwide. Despite their appeal, though, freelancers rarely commit for the long term, so a company planning a stable process will need strict QA and constant communication.

- Agencies and partners. For medium and large companies, it is more profitable to hire a data annotator through a specialized agency. Such partners already have trained teams, quality standards, and experience in project management. This is especially convenient if you need to quickly assemble a team for a specific domain. See our tips on how to evaluate a data annotation service vendor.

- In-house teams. Some companies opt for full control and build their own teams. This option costs more up front but offers tighter control over confidentiality and quality. In long-term projects, especially those involving sensitive or closed data, the in-house model often proves more cost-effective.

The choice depends on the project’s maturity and budget, but in any case, remember: the right team is worth far more than a pair of working hands.

How to Evaluate Candidates

Even the best resume will not tell you how a person will handle real annotation work. That is why hiring a data annotator requires a practical approach.

- Test assignments. This is where you can see how attentive the candidate is to detail, how well they can read guidelines, and how logically they can think. One well-designed test can replace dozens of interviews.

- Trial period. True quality is revealed in routine work. The first two weeks will show how consistent the annotator is, how quickly they respond to corrections, and how well they follow instructions.

- Accuracy metrics. For objectivity, companies introduce accuracy benchmarks — internal standards for evaluating annotations. If a candidate consistently maintains a high percentage of matches with the benchmark, they can move on to permanent employment.
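A benchmark check comes down to comparing a candidate’s labels with a gold-standard answer key. Below is a minimal sketch under assumed conditions. Each item has a single class label, and skipped items count as errors. The 0.95 pass bar is a hypothetical value, not an industry standard. One way to express "consistently maintains" is to require every trial round to clear the bar, rather than only the average.

```python
PASS_THRESHOLD = 0.95  # hypothetical bar; set it from your own project's QA standard


def benchmark_score(candidate, gold):
    """Share of benchmark items where the candidate's label matches the gold label.

    candidate, gold: {item_id: label}. Items the candidate skipped count as misses.
    """
    if not gold:
        raise ValueError("gold benchmark is empty")
    matches = sum(1 for item_id, label in gold.items() if candidate.get(item_id) == label)
    return matches / len(gold)


def passes_trial(round_scores, threshold=PASS_THRESHOLD):
    """True only if every trial round meets the threshold (not just the average)."""
    return bool(round_scores) and min(round_scores) >= threshold


gold = {"t1": "pedestrian", "t2": "cyclist", "t3": "car", "t4": "car"}
candidate = {"t1": "pedestrian", "t2": "pedestrian", "t3": "car", "t4": "car"}
score = benchmark_score(candidate, gold)
print(score, score >= PASS_THRESHOLD)  # 0.75 False
print(passes_trial([0.97, 0.96, 0.98]))  # True
```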

Experienced managers look not only at the numbers, but also at how a person responds to feedback. A true professional does not defend themselves, but asks, “Why is this a mistake?” and “How can I do better?”

Cultural Fit and Teamwork

Good annotation is not only about accuracy, but also about atmosphere. In large projects, dozens of annotators work in parallel, and if there is no mutual understanding between them, the quality of the data quickly declines.

Therefore, when choosing the right data annotation team, it is important to consider the cultural and communicative context:

- common language and time zone;

- communication habits (chat, calls, stand-ups);

- willingness to help each other.

Teams where annotators feel part of a common task work more consistently and respond faster to changes. One of the project managers at Tinkogroup recalls: “When we started holding short 15-minute meetings in the morning, the error rate dropped by 20%. People just started talking to each other.” This “human synchronization” is not written in the guidelines, but it is what makes the team alive and effective.

Building and Managing a Data Annotation Team

Creating and managing a team of annotators is not just a hiring process. It is a balance between the human factor, technology, and economics. Every decision — where to locate the team, what tools to use, how to evaluate the results — directly affects the quality of the final data and, consequently, the success of the ML model.

In-house vs. Outsourcing: Pros and Cons

Choosing between in-house and outsourced data labeling is one of the first strategic steps. There is no universal answer here: it all depends on the tasks, the budget, and how fast the company plans to grow. Here is how the two options compare.

- In-house team. This is the path to maximum control. Having your own annotators is an investment in long-term stability. They know the product, the data context, and the characteristics of the customers. Communication is easier, feedback is faster, and confidentiality is higher. But the price is time and resources. Training, management, technical infrastructure, and HR processes are required. This format is justified if the project is strategic and the data is sensitive (e.g., medical images, financial documents, or personal user data).

- Outsourcing. In the early stages of a startup, or whenever rapid scaling is needed, this is a lifesaver. By working with an external partner, the company gains access to a trained team and a ready-made ecosystem of tools. A reliable data annotation partner for ML relieves the business of the operational burden, especially when you need to build a scalable annotation pipeline. The downside is the potential for problems with quality, response time, or cultural differences. That is why it is important to choose a partner with a transparent QA system, client reviews, and a proven reputation.

Many companies ultimately opt for a hybrid model: part of the team works in-house, and part works through an external provider. This achieves flexibility: permanent employees are responsible for quality and standards, while the partner helps during peak loads.

Balancing Cost, Quality, and Scalability

Managing a team of annotators is like balancing three points: cost, quality, and scale. The optimal balance depends on the maturity of the project.

- Cost. Cheaper is not always better. Saving on labor or tools often results in double the cost of fixing mistakes. Professional managers follow a different logic: “It’s better to pay for accuracy once than three times for chaos.”

- Quality. It depends directly on training and control processes. A peer review system and automatic verification against reference (gold-standard) annotations help here. It is important not only to track errors, but also to analyze their causes, such as unclear instructions or a lack of feedback.

- Scalability. As a project grows, data processing speed becomes critical. But you can only scale what already works reliably. Experienced managers first create a “core” of reliable annotators, debug QA processes and communication, and only then scale up.

Tools and Platforms that Make Management Easier

Without the right tools, even the best team loses efficiency. Today, there are many platforms that help manage data annotation, from open-source solutions to enterprise SaaS.

- For task management: ClickUp, Asana, and Jira help you track progress and deadlines.

- For annotation: Label Studio, SuperAnnotate, CVAT, and Amazon SageMaker Ground Truth each have their own strengths depending on the type of data (text, images, video, audio).

- For quality control: automatic metrics integrated with peer review systems, plus reports on errors and annotators’ cognitive load. Companies that work with partners like Tinkogroup get access to comprehensive monitoring tools, from performance dashboards to AI analytics that predict errors before they happen.

Monitoring Performance and Ensuring Quality

A manager’s true skill is not in hiring, but in maintaining quality over months or years.

This requires a systematic approach: transparent metrics, team involvement, and open communication.

- Metrics:
  - accuracy;
  - consistency;
  - speed;
  - review score.

- Processes:
  - weekly QA reports;
  - two-way feedback (annotator ↔ manager);
  - minimum bureaucracy, maximum trust.
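The four metrics above can be combined into a weekly QA report. The sketch assumes a hypothetical task log. In that log, some items were checked against reference annotations, some were repeated control items, and some received a peer-review score. Fields that do not apply to a task are `None`. All field names and the scoring scale are illustrative.

```python
from collections import defaultdict
from statistics import mean


def share_true(flags):
    """Fraction of True among non-None flags, or None if nothing was checked."""
    checked = [f for f in flags if f is not None]
    return sum(checked) / len(checked) if checked else None


def weekly_report(tasks):
    """Aggregate accuracy, consistency, speed, and review score per annotator.

    Each task is a dict with hypothetical fields:
      annotator, seconds, correct (bool/None), repeat_match (bool/None),
      review_score (number/None).
    """
    by_person = defaultdict(list)
    for task in tasks:
        by_person[task["annotator"]].append(task)

    report = {}
    for person, items in by_person.items():
        total_seconds = sum(t["seconds"] for t in items)
        scores = [t["review_score"] for t in items if t["review_score"] is not None]
        report[person] = {
            "accuracy": share_true(t["correct"] for t in items),
            "consistency": share_true(t["repeat_match"] for t in items),
            "items_per_hour": len(items) * 3600 / total_seconds if total_seconds else None,
            "review_score": mean(scores) if scores else None,
        }
    return report


tasks = [
    {"annotator": "Ana", "seconds": 30, "correct": True, "repeat_match": None, "review_score": 5},
    {"annotator": "Ana", "seconds": 30, "correct": False, "repeat_match": True, "review_score": 4},
    {"annotator": "Ana", "seconds": 60, "correct": None, "repeat_match": None, "review_score": None},
]
print(weekly_report(tasks)["Ana"])
# {'accuracy': 0.5, 'consistency': 1.0, 'items_per_hour': 90.0, 'review_score': 4.5}
```

The report uses `None` instead of 0 when nothing was checked. That way, a week with no checks does not look like a week of poor performance.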

People who feel that their work affects the quality of the product don’t just label data; they become part of the ML ecosystem. In one of Tinkogroup’s projects, the manager recalls:

“After we started showing annotators the actual results of model training on their data, they began to approach labeling with a completely different level of responsibility.” This is how professional pride is born, and with it — stability and accuracy.

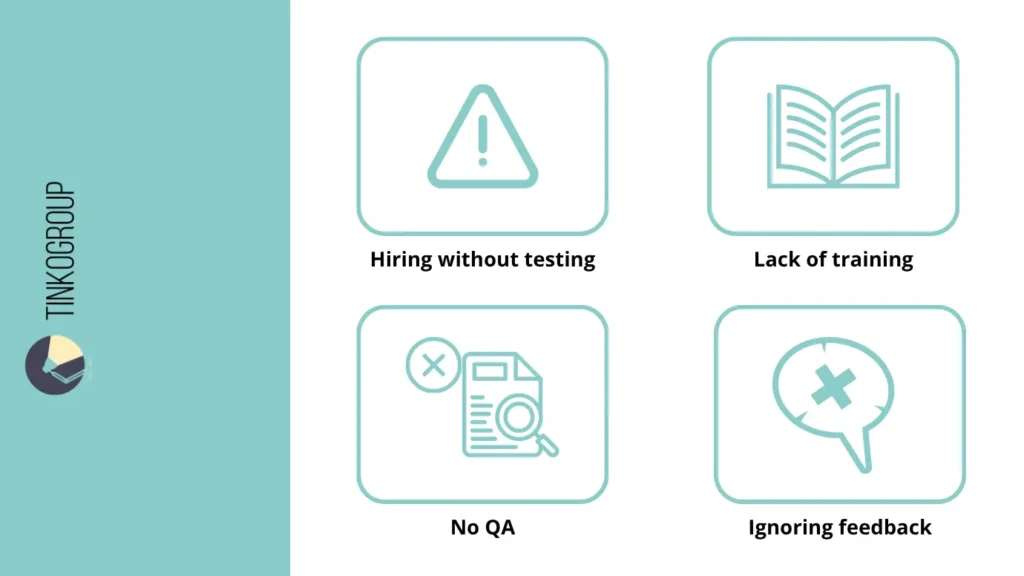

Common Mistakes to Avoid

To sidestep the typical challenges of choosing a data annotator, watch out for these mistakes:

- Hiring without testing. Check candidates’ attentiveness and accuracy with a test assignment before you hire them.

- Lack of proper training. Without training, annotators make more mistakes. Implement a clear data annotation training process and explain the rules with examples.

- No QA system in place. Without quality control, even an experienced team’s mistakes go unnoticed. Use peer review and regular checks.

- Ignoring annotator feedback. Annotators are the first to spot problems. Listen to them — it helps improve guidelines and data quality.

Conclusion

Creating high-quality datasets for AI/ML projects is not just about technology, but also about people. The right specialists plus well-designed training produce accurate, stable, and useful data. Any mistake along the way will affect the model, so it is worth investing in processes and the team early.

If you are looking for a reliable data annotation partner for ML who can help you choose a data annotator, train your team, and organize quality control, Tinkogroup is a proven partner.

Working with them provides:

- selection of specialists with the necessary data annotation skills;

- an effective data annotation training process;

- flexible management of in-house and outsourced teams;

- a QA and feedback system for consistent results.

Tinkogroup helps companies build teams where each annotation reflects an accurate understanding of the data, so your models learn from fewer errors. Choose the right people and the right training, and your ML projects will be set up for success.

How to choose a data annotator for a long-term project?

Focus on candidates who demonstrate high attention to detail through test assignments and show a basic understanding of AI/ML context to ensure they can handle complex data scenarios.

What are the main risks of skipping the training process?

Without proper training and clear guidelines, annotators are more likely to produce inconsistent results and “noise,” which can lead to costly model errors and expensive data rework.

Is it better to hire an in-house team or an agency?

It depends on your priorities: an in-house team offers maximum control and security for sensitive data, while an agency provides better scalability and access to pre-trained specialists for faster project growth.