The phrase “AI models are only as good as their training data” has been relevant for decades. But in recent years, this statement has become especially pressing: the number of projects is growing, budgets are increasing, and failures are increasingly linked not to algorithms but to the quality of the source data.

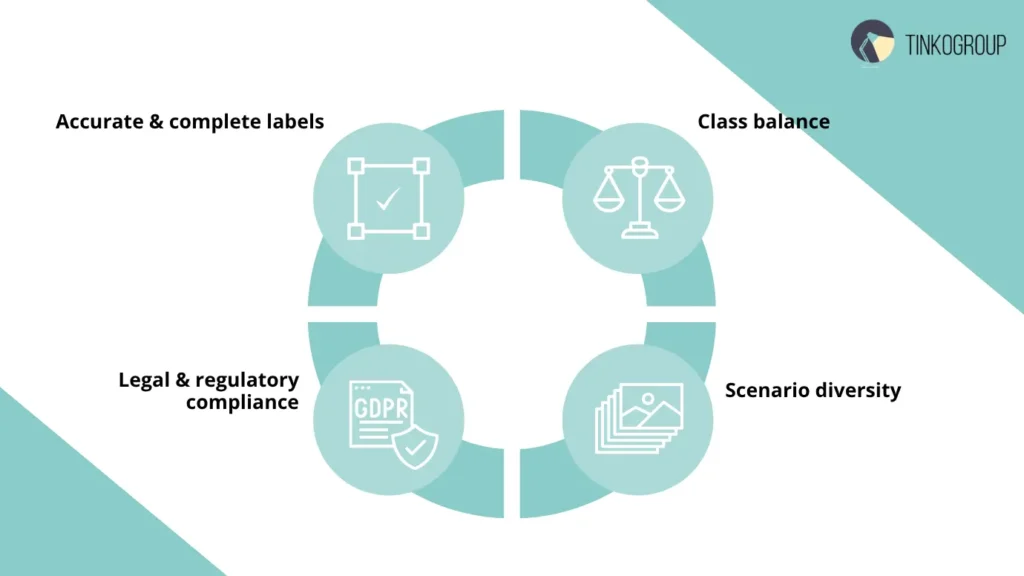

When it comes to dataset quality, many people think only about sample size. In practice, however, annotation accuracy, class balance, scenario diversity, and even legal purity are more important. The same principle applies to operational data outside of AI — for example, in eCommerce, where maintaining catalog consistency depends on consistent Shopify product uploads rather than ad-hoc or manual processes.

If these parameters are violated, hidden annotation problems undermine results, and businesses face failure during the implementation phase.

It’s telling that even large companies investing millions in development can fail due to poorly annotated data. This risk has become systemic and can no longer be ignored.

What Makes a High-Quality Dataset?

To understand why annotation errors are so devastating, it’s important to first define the characteristics that distinguish a truly high-quality dataset. Dataset quality refers not only to the accuracy of labeling but also to consistency: balance, completeness, diversity, and compliance with industry guidelines.

Annotation Accuracy, Consistency, Completeness

Annotation accuracy is a fundamental criterion. If an object is labeled incorrectly, the model learns from distorted information. However, accuracy alone is not enough: annotation consistency, that is, the consistency of approaches across different annotators, is also important. When two specialists interpret the same object differently, the resulting ground truth dataset quality becomes fragmented. Completeness is another critical aspect. Missing objects or attributes create “blind spots” that prevent the algorithm from correctly responding to new data.

In practice, this is where hidden risks most often arise. In a road sign recognition project, for example, annotators systematically cropped the edges of objects when marking bounding boxes. To humans, this seemed like an insignificant error. However the model began to interpret the road sign as a truncated object and lost accuracy in real images. This is a clear example of how even a small deviation undermines AI model accuracy.

Balanced Class Representation

The second key parameter is balance. A dataset in which 85% of images contain cats and only 15% dogs creates an obvious bias: the model will guess the more common class and fail on the rarer one. This is not just an academic problem. In commercial projects, such imbalance leads to a drop in AI model accuracy by tens of percent and a loss of user confidence.

Therefore, dataset preparation should include monitoring the class distribution and, if necessary, artificial data augmentation or additional annotation of missing categories.

Dataset Diversity

The third characteristic is dataset diversity in AI. Real-world data is never the same: lighting changes, angles differ, and rare or borderline scenarios occur. If a dataset doesn’t capture these variations, the project becomes vulnerable to unexpected situations.

For example, a computer vision system trained only on daytime images sharply loses performance at night. Adding nighttime images to the dataset immediately improves performance, demonstrating the value of diversity.

Compliance with Standards

The fourth criterion is compliance with industry standards and regulations. Dataset quality cannot be considered outside of a legal and ethical context. In healthcare, ignoring HIPAA or GDPR when processing data can lead to multimillion-dollar fines and a ban on model use. In financial services, violating KYC/AML regulations can lead to license revocation.

That’s why a high-quality dataset requires not only accurate and complete labeling but also compliance with all formal requirements. Standardization and process transparency increase confidence in the results and reduce business risks.

Common Annotation Problems That Hurt AI Models

Having understood what determines high dataset quality, we can move on to the downside. In practice, most failures are due to recurring annotation problems. These arise across different projects and directly undermine the effectiveness of models.

Mislabeling and Missing Labels

Labeling errors or missing labels are the most basic and, at the same time, the most damaging. In small samples, they appear as random anomalies, but in large-scale projects, they create systematic biases.

For example, if some images of cats are mistakenly labeled as dogs, the model begins to recognize non-existent features and produces false results. Missing labels are even worse: the object is effectively ignored, and the algorithm never learns of its existence. In medical projects, such omissions can mean that the model “miss[es]” a tumor in an image because that area was never labeled.

Inconsistent Class Definitions

The second category of problems is inconsistent definitions. Different annotators use different names or rules for the same object. Even small discrepancies lead to class blurring.

If one specialist uses the class “car” and another “vehicle,” the system sees them as two different concepts. Without a strict terminology guide, annotation consistency breaks down, and the final annotation QA process becomes a formality. As a result, the model doesn’t learn to distinguish between real classes, but gets confused by conflicting signals.

Incorrect Bounding Boxes, Polygons, or Masks

Geometric errors seem minor, but they often undermine the ground truth of the dataset. A bounding box misaligned by a few pixels, or a polygon that “cuts off” part of an object, create distorted training examples.

In detection tasks, such defects lead to the algorithm capturing only part of the object and failing to see the whole object. In reality, this results in a drop in AI model accuracy on test data. For example, in pedestrian recognition projects, incorrect masks created the illusion that people were missing limbs. As a result, the model was unable to correctly recognize people in motion.

Overuse of Automated Pre-Labeling Without Review

Automated pre-labeling speeds up processes, but its blind use is a source of widespread errors. Pre-labeling algorithms are trained on limited data and often replicate existing biases. If their results are not manually verified, these biases are copied and ingrained in the final dataset.

For example, in a document recognition project, the auto-labeling system mistakenly labeled logos as text blocks. Since verification was disabled for the sake of speed, thousands of errors made it into the final sample. As a result, the business received a model that “read” logos instead of text.

Lack of Domain Knowledge Among Annotators

The final critical factor is the lack of domain expertise. General-purpose annotators without specialized training can handle simple tasks, but in niche projects, this is a recipe for failure.

Medical labeling without the participation of doctors or agricultural data without agronomists lead to fundamental biases. The model learns from false information and becomes inapplicable in real life. Human-in-the-loop annotation plays a key role here: experts can spot errors that remain invisible to non-experts.

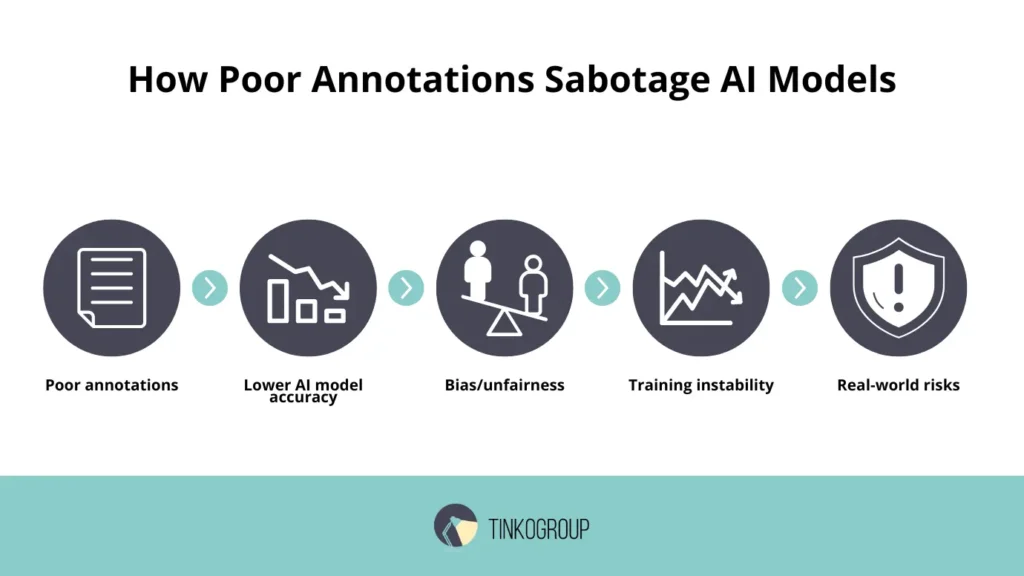

How Poor Annotations Sabotage AI Models

Annotation errors are not just cosmetic flaws, but a systemic threat that impacts the performance of algorithms and the ultimate value of a product. The consequences manifest themselves at all levels, from a drop in metrics to direct risks to human life.

Drops in AI Model Accuracy (precision, recall, F1 score)

The first and most obvious consequence is a drop in model quality, measured through AI model accuracy, precision, recall, and F1 score. Label errors lead to an increase in false positives and false negatives.

For example, in medical diagnostics, with 5% of erroneous annotations on X-ray images, precision can drop by 20-30%. This means the model begins to produce too many “unnecessary” diagnoses and also misses real pathologies. In industrial quality control, such errors mean that defective parts are inspected, while healthy ones are rejected. Thus, low dataset quality instantly turns an expensive model into an unusable tool.

Propagation of Bias and Unfairness

The second problem is the perpetuation and scaling of bias. A lack of dataset diversity in AI leads to errors propagating throughout the system.

A well-known example: a 2018 MIT study found that facial recognition algorithms performed significantly worse at identifying dark-skinned women than white men. The reason was not the model architecture, but poorly annotated data and unbalanced classes.

When such systems are implemented in real-world businesses, the consequences extend beyond statistics. In banking, this can lead to discrimination in lending, and in HR systems, to unfair candidate selection. Annotation errors perpetuate social biases and make the product legally vulnerable.

Training Instability and Longer Convergence

Bad data creates a “noisy training” effect. The model receives conflicting signals, gets stuck in local minima, and requires more epochs to converge.

In practice, this means increased training time and computing power costs. For companies, this translates into additional GPU/TPU costs and delays in bringing a product to market. For startups, such delays are critical: the window of opportunity may close, and competitors may gain an advantage.

Furthermore, unstable training reduces the reproducibility of experiments. A model trained with the same code and architecture, but with a slightly modified version of the dataset, produces radically different results. This makes systematic product improvement impossible.

Reliable Data Services Delivered By Experts

We help you scale faster by doing the data work right - the first time

Real-World Risks

The most serious consequence of annotation problems manifests itself in the real world, where model errors are costly.

- In medicine, incorrectly labeled data leads to false negative diagnoses. A missed tumor or unrecognized symptom threatens the patient’s health and life.

- In autonomous vehicles, misaligned bounding boxes mean the system fails to detect a pedestrian or cyclist. An error here can cost lives.

- In cybersecurity, mislabeled network events lead to attacks being classified as “benign,” creating a direct vulnerability for businesses.

It is at this level that it becomes clear: dataset quality is not an optional parameter, but the foundation of security, trust, and accountability in AI implementation.

Dataset Quality Assurance (QA)

Aware of the risks associated with poorly annotated data, companies are implementing systematic quality assurance processes. It’s important to understand that the annotation QA process is not a one-time check before model training. But an ongoing practice built into every stage of dataset preparation.

Annotation Review Workflows

The first step is to establish workflows for regular annotation review. Even the most experienced specialists begin to make mistakes after a while: fatigue, lapses in judgment, subjective interpretations.

Therefore, companies are building a pipeline where verification is integrated into the annotation process itself. This most often means:

- random samples of already annotated data are sent for independent re-checking;

- identified errors are classified (systemic or isolated);

- results are recorded in reports accessible to both annotators and project managers.

This approach reduces the likelihood that errors will accumulate and make their way into the final ground truth dataset quality.

Multi-Layer QA

A multi-layered QA system demonstrates maximum efficiency:

- Peer review is a cross-check. Annotators review each other’s work, which helps quickly identify obvious inconsistencies and establish a consistent style.

- Expert validation involves engaging specialists to review complex cases. For example, a doctor analyzes controversial medical images, or an engineer analyzes data on equipment components.

- Automated checks are scripts and tools that search for empty bounding boxes, duplicate images, invalid classes, or file structure violations. These checks don’t replace human review, but they eliminate routine work.

This combination allows for a balance between speed and accuracy: automation detects technical failures, annotators resolve systemic inconsistencies, and experts ensure correctness on critical data.

Metrics: Inter-Annotator Agreement, Error Rate, Annotation Coverage

Metrics are needed to measurably measure the process. Three key metrics are most commonly used:

- Inter-annotator agreement (IAA) is a measure of agreement between annotators. A high IAA indicates that the rules are applied consistently. A low IAA is a signal to revise the guides or require additional team training.

- Error rate is the percentage of errors in a given sample. This metric provides a clear understanding of the scale of the problem and helps compare the quality of work across teams or contractors.

- Annotation coverage is the completeness of annotation coverage. This metric shows how completely objects and attributes are reflected in the dataset. Low coverage indicates that the model is being trained on leaky data and will not perform adequately in real-world situations.

Regular monitoring of metrics helps transform QA from a subjective check into an objective quality management tool.

The Role of Human-in-the-Loop Annotation

Even the most advanced autolabeling and automated checking algorithms cannot replace human experts. This is why human-in-the-loop annotation is considered the gold standard.

The approach involves human intervention at critical moments: checking questionable cases, clarifying boundary examples, and adjusting the automatic pre-labeling. This symbiosis accelerates the process without sacrificing quality.

For example. In medical image labeling projects, AI can automatically highlight suspected tumor areas. But the final decision is always made by a physician. In agricultural applications, the algorithm marks areas with plants. So, an expert clarifies the exact location of weeds and crops.

Human-in-the-loop annotation helps maintain high dataset quality, especially in complex and demanding industries.

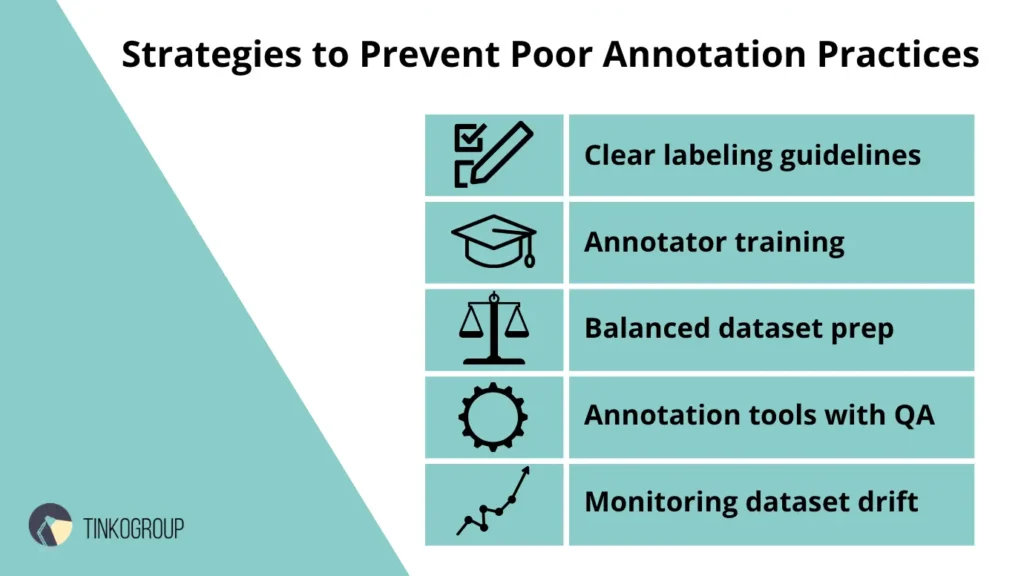

Strategies to Prevent Poor Annotation Practices

It’s important not only to correct errors, but also to prevent them.

Clear Labeling Guidelines

Annotators should work according to a single guide. The more detailed the document, the higher the chance of maintaining annotation consistency.

Ongoing Annotator Training and Calibration

Even experienced specialists need training. Regular calibration sessions help maintain quality.

Balanced Dataset Preparation Across Classes and Conditions

This is not only about technical balancing but also about the business context. Therefore, dataset preparation must consider all classes, scenarios, and rare conditions.

Use of Annotation Tools with Built-In QA and Version Control

Modern tools include built-in checks and version control. They reduce the risk of errors and make the process more transparent.

Continuous Monitoring of Dataset Drift

Even a perfectly prepared dataset becomes outdated. Over time, data begins to drift, and quality declines. Therefore, continuous monitoring is a mandatory element of a mature pipeline.

Case Snapshot

Real-world cases provide good evidence.

In one e-commerce project, a model was designed to recognize products in photographs. While it demonstrated high metrics in testing, accuracy dropped by 40% in production. The audit revealed widespread errors in bounding boxes.

After implementing multi-level QA and retraining annotators, the metrics increased:

- mAP from 0.42 to 0.71;

- IoU from 0.38 to 0.65.

This is an example of how a systematic approach to data quality can literally save a project.

Conclusion

Thus, poorly annotated data undermines model performance at all levels: from metrics to real risks. Annotation errors mean not only technical difficulties but also direct financial losses.

Therefore, dataset quality monitoring is not optional, but a strategic priority. It’s important to build a systematic annotation QA process, invest in annotator training, and implement modern tools.

Companies that don’t want to spend millions on “garbage” data should turn to professionals. Tinkogroup helps clients improve the quality of annotations, build quality control processes, and improve model performance. Learn more on the Tinkogroup’s service page.

Is annotation accuracy the only metric for dataset quality?

No. While accuracy is fundamental, a truly high-quality dataset must also be balanced (equal representation of classes), diverse (covering different scenarios and lighting conditions), and compliant with legal standards like GDPR or HIPAA.

How exactly does poor annotation impact AI models?

Poor annotations cause a chain reaction: they lower metrics (precision, recall, F1), increase training time due to “noisy” data, propagate biases, and can lead to critical failures in real-world applications (e.g., in healthcare or autonomous driving).

Can we rely solely on automated labeling to save costs?

Not entirely. Automated labeling often replicates existing biases and misses edge cases. The “Human-in-the-loop” approach is essential, where human experts validate complex scenarios to ensure the ground truth is actually correct.