Data privacy in AI annotation is a fundamental element of any modern AI training project. When teams process images, text, audio, or medical data, data protection is not only a legal obligation, but also a key factor in user and partner trust.

Failure to comply with privacy regulations can lead to serious AI data privacy risks, including fines under GDPR, CCPA, or HIPAA, litigation, and reputational damage. In an environment where customer trust directly affects the success of an AI product, privacy breaches can result not only in financial losses, but also in a decrease in the company’s competitiveness. Even when privacy is handled correctly, teams should also watch for annotation bias that can make AI systems unfair from the ground up.

Companies working with privacy-compliant AI annotation minimize these risks by ensuring secure data processing at all stages. This includes checking data sets for PII, using anonymization and pseudonymization, and implementing internal controls and audits.

Understanding Data Privacy in AI Annotation

Before starting the data annotation process, it is crucial to understand what is meant by data privacy in AI annotation. A clear definition of data privacy helps you minimize the risk of leaks, avoid legal issues, and build a relationship of trust between the company, users, and partners.

What Constitutes “Data Privacy” in Annotation

Data privacy in AI annotation covers all measures aimed at protecting the data that is used to label and train AI models. This approach is not limited to technical means: it also includes organizational processes, access control, and methods that secure information at every stage of work. Key measures include:

- Controlling employee access to the data set.

- Minimizing the amount of collected information to the required level.

- Applying anonymization and pseudonymization methods.

- Reliable storage and secure transmission of data.

Types of Data Involved

To effectively protect data, it is important to understand what types of information are annotated. Each type of data has its own characteristics and potential risks of disclosure, so approaches to protecting it may differ.

- Images — photographs of faces, documents, objects.

- Audio — voice recordings, calls, environmental sounds.

- Text — correspondence, reviews, documents.

- Medical data — patient information, test results.

- Other sensitive data — financial data, geolocation, biometrics.

Differences between Anonymization and Pseudonymization

Understanding the differences between anonymization and pseudonymization is critical to building a secure annotation process. These methods allow you to use data to train AI without disclosing personal information.

- Anonymization irreversibly removes or alters all identifying data, so that individuals can no longer be re-identified.

- Pseudonymization replaces identifiers with codes or tokens, while keeping the ability to re-link records to the individual if necessary, using a key or lookup table that is stored separately.
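The difference between the two methods can be sketched in a few lines of Python. This is a minimal illustration, not a production recipe: the field names, the `SECRET_KEY` value, and the `subj_` prefix are assumptions made for the example, and dropping direct identifiers alone does not guarantee anonymity if the remaining fields can be combined to single someone out.

```python
import hashlib
import hmac

# Hypothetical key for illustration; a real key would be stored apart from the dataset.
SECRET_KEY = b"example-key-kept-outside-the-dataset"


def pseudonymize(identifier: str, lookup: dict, key: bytes = SECRET_KEY) -> str:
    """Swap an identifier for a stable keyed code and record the mapping for re-linking."""
    digest = hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()
    code = "subj_" + digest[:16]
    # The lookup table is what makes re-linking possible; it must be stored
    # separately from the annotated data and be access-controlled.
    lookup[code] = identifier
    return code


def anonymize(record: dict, identifying_fields: set) -> dict:
    """Drop identifying fields entirely; no mapping back to the person is kept."""
    return {k: v for k, v in record.items() if k not in identifying_fields}


record = {"name": "Jane Doe", "email": "jane@example.com", "label": "positive"}

lookup = {}
pseudonymized = {
    **record,
    "name": pseudonymize(record["name"], lookup),
    "email": pseudonymize(record["email"], lookup),
}
anonymized = anonymize(record, {"name", "email"})
```

The pseudonymized record can still be traced back by whoever holds `lookup`, while the anonymized record keeps only the label.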

Expert Tip: Tinkogroup often uses a combination of pseudonymization and synthetic data generation, which allows you to protect PII while maintaining high annotation quality.

Legal Frameworks & Compliance

Any data annotation project in AI must comply with existing laws and regulations. Data privacy in AI annotation directly depends on how well the team understands and complies with legal requirements. Violations can lead to fines, litigation, and loss of customer trust.

Overview of Major Laws

Several key regulations at the international and local levels determine how personal data can be handled:

- GDPR (General Data Protection Regulation, Europe) — strict rules for the processing of personal data, including consent of subjects, rights to access and delete information;

- CCPA (California Consumer Privacy Act, USA) — the rights of users to access, correct, and delete their personal data;

- HIPAA (Health Insurance Portability and Accountability Act, USA) — protection of patients’ medical information;

- other local regulations — laws and standards of different countries and industries governing the storage and processing of sensitive data.

Key Obligations for Annotation Teams

To ensure that annotation projects comply with legal requirements, teams must implement a set of organizational and technical measures:

- obtaining consent from data subjects, or establishing another lawful basis for processing, before annotation;

- control over the secure storage and transfer of information;

- training staff in privacy rules and signing NDAs;

- keeping logs of annotator actions and regularly auditing processes.

Risks of Non-Compliance

Failure to comply with laws and data processing rules creates serious risks for the company and its clients:

- financial fines and lawsuits;

- loss of trust from users and partners;

- problems with publishing and introducing AI models to the market;

- reduction in the quality of privacy-safe AI training due to the need to adjust processes.

Expert Tip: In one large project for a European client, Tinkogroup carried out large-scale annotation of images and texts. The team implemented the following measures:

- auditing source datasets and removing redundant PII;

- configuring role-based access in data annotation to restrict access to sensitive information;

- implementing an internal platform that logs all annotator actions;

- regular GDPR compliance checks at each stage of the project.

Result: the project passed the audit without any comments, and the models were trained securely and in compliance with privacy requirements, which strengthened the client’s trust and accelerated the product’s introduction to the market.

Identifying and Handling Sensitive Data

Working with confidential and personal data is one of the most important stages of privacy-focused AI annotation. Legal compliance, lower AI data privacy risks, the trust of clients and end users, and the quality of the final models all depend directly on how accurately the team identifies and processes such information. Any error at this stage can lead not only to serious fines, but also to reputational losses that are difficult to recover from. A sound strategy for handling sensitive information should therefore be defined at the project planning stage.

What is Personally Identifiable Information (PII)

Personally Identifiable Information (PII) is any information that can directly or indirectly identify a specific individual. It is important for annotation teams to be clear about the boundaries of this definition, as different types of data require different protections. These can be obvious identifiers, such as names or addresses, or less obvious features, such as combinations of characteristics that together allow an individual to be identified.

PII includes biometric data, such as fingerprints, facial images, and voice recordings; health and financial information; unique logins and IP addresses; and location data. Regardless of the source, any piece of information that can identify an individual should be treated with increased security.

Examples

The types of PII can vary significantly depending on the project domain and data format. In medical tasks, these can be diagnoses, test results, and medication history. In video projects, these can be people’s faces, interior details, or car license plates that can be linked to the owner. In services that analyze mobile data, geolocation is at risk, including the user’s exact coordinates and routes.

Even seemingly neutral data, such as nicknames, can be valuable to attackers when combined with other information. Therefore, proper classification of each element of the data set allows you to implement adequate protection mechanisms even before the annotation process begins.

How to Label Datasets without Exposing Sensitive Information

The annotation process must be structured to preserve the value of the data for training models while minimizing the risk of PII leakage. So how do you anonymize data for machine learning? This is achieved through a combination of architectural and organizational measures. For example, instead of displaying personal data directly, the platform shows anonymized equivalents, codes, or pseudonyms. Elements that could lead to identification are masked or removed at the preprocessing stage.

When possible, real data is replaced with synthetic data, so that the model is trained on statistically similar but safe datasets. Additional protection comes from strict access control in data annotation, where each annotator sees only the part of the information needed to complete their task, without the ability to view or download the full dataset.
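As a rough sketch of the masking step at the preprocessing stage, the snippet below replaces detected spans with typed placeholders before text reaches annotators. The two regular expressions are illustrative assumptions: they catch only simple email and phone formats and cannot detect names, so real pipelines typically combine patterns like these with trained entity recognition models.

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def mask_pii(text: str) -> str:
    """Replace each detected span with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


sample = "Contact Anna at anna.k@example.com or +1 (555) 010-9999 about the order."
masked = mask_pii(sample)
print(masked)
```

Note that the first name in the sample passes through untouched, which is exactly the kind of gap that a pattern-only approach leaves open.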

Expert Tip: Tinkogroup has developed internal tools that automatically detect PII in incoming datasets. Before the materials are sent to annotators, the system removes or masks sensitive elements, ensuring full compliance with privacy-compliant AI annotation standards and protecting the privacy-safe AI training process from any possible leaks. This approach not only minimizes legal risks, but also speeds up the workflow by automating data protection stages.

Best Practices for Privacy-First Annotation

Building a secure and ethical data annotation process is not only a legal requirement, but also an important component of trust in your AI system. Following AI training best practices in terms of privacy allows you to reduce AI data privacy risks, minimize the likelihood of leaks and improve your company’s reputation.

With the growing volume of data and a variety of formats — from images to medical records — it is necessary to build a comprehensive ethical data annotation workflow that protects users at every stage. Below we will consider the key methods that help implement privacy-compliant AI annotation in real projects.

Data Minimization

The principle of data minimization involves collecting and storing only the data that is really needed to complete the task. Redundant information increases the risk of leakage and complicates protection.

- Before starting annotation, an audit of the project goals and the list of data is carried out.

- All fields and attributes that do not affect the final model are removed.

- For images and videos, areas that are not related to the target objects are cropped or blurred.

For example, if the model is trained to recognize the type of car by the chassis number, there is no need to store the owner’s personal data.
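One simple way to apply this principle is an allowlist: only fields that the task needs are passed on, and everything else is dropped before annotation. The field names below are hypothetical and chosen to match the car example.

```python
# Hypothetical field names for a vehicle-classification dataset.
REQUIRED_FIELDS = {"image_id", "chassis_number", "vehicle_type"}


def minimize(record: dict, required: set = REQUIRED_FIELDS) -> dict:
    """Keep only allowlisted fields; anything else never reaches annotators."""
    return {k: v for k, v in record.items() if k in required}


raw = {
    "image_id": "img_001",
    "chassis_number": "EXAMPLE0000000001",
    "vehicle_type": "sedan",
    "owner_name": "Jane Doe",
    "owner_phone": "+1 555 010 9999",
}
minimal = minimize(raw)
```

An allowlist is usually safer than a blocklist here, because a new field added upstream is excluded by default instead of leaking through.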

Secure Storage and Transfer of Datasets

Secure storage and transfer of data is the basis for trust in an AI project. It is important to use a set of measures here:

- Encryption at rest and in transit (for example, AES-256 and TLS 1.3).

- Dividing the dataset into parts with different levels of access.

- Storing data only on trusted servers, preferably in certified data centers (ISO 27001, SOC 2).

- Monitoring activity with alerts about suspicious actions.

For example, with secure data annotation for medical images, Tinkogroup uses end-to-end encryption and secure transmission channels, which greatly reduces the risk of interception.
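For the transmission side, a client can be configured to refuse anything older than TLS 1.3. The sketch below uses Python's standard `ssl` module; the function name is an assumption for the example, and it presumes the local OpenSSL build supports TLS 1.3.

```python
import ssl


def make_tls13_context() -> ssl.SSLContext:
    """Client-side context that verifies certificates and refuses versions below TLS 1.3."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_3
    return context


context = make_tls13_context()
```

The resulting context can then be passed to standard clients, for example through the `context` argument of `urllib.request.urlopen`.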

Role-Based Access Controls for Annotators

Role-based access controls are a way to ensure that each annotator sees only the data they need to complete their task. This reduces the risk of leaks and accidental privacy violations.

- Assign roles: annotator, supervisor, QA specialist, administrator.

- Limit access to source data for interns or temporary employees.

- Use time-limited access tokens.

- Log all actions in the audit log.

In Tinkogroup projects, this approach reduced incidents of unauthorized access by 70%.
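A minimal sketch of how roles and time-limited tokens might fit together is shown below. The permission names, the 15-minute default lifetime, and the `AccessToken` structure are assumptions for illustration, not a description of any particular platform.

```python
import secrets
import time
from dataclasses import dataclass

# Hypothetical permission map for the four roles listed above.
ROLE_PERMISSIONS = {
    "annotator": {"view_task", "submit_label"},
    "qa_specialist": {"view_task", "review_label"},
    "supervisor": {"view_task", "review_label", "assign_task"},
    "administrator": {"view_task", "review_label", "assign_task", "export_dataset"},
}


@dataclass(frozen=True)
class AccessToken:
    user: str
    role: str
    expires_at: float  # Unix timestamp after which the token is rejected
    value: str


def issue_token(user: str, role: str, ttl_seconds: int = 900, now: float = None) -> AccessToken:
    """Issue a random token that expires ttl_seconds after it is created."""
    now = time.time() if now is None else now
    return AccessToken(user, role, now + ttl_seconds, secrets.token_urlsafe(16))


def is_allowed(token: AccessToken, action: str, now: float = None) -> bool:
    """Allow an action only if the token is still valid and the role grants it."""
    now = time.time() if now is None else now
    if now >= token.expires_at:
        return False
    return action in ROLE_PERMISSIONS.get(token.role, set())


token = issue_token("annotator_17", "annotator", ttl_seconds=60, now=1000.0)
```

With this token, `is_allowed(token, "submit_label", now=1010.0)` is true, while an export attempt or any request after expiry is refused. Each decision would also be written to the audit log.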

Using Synthetic Data where Possible

Synthetic data is artificially created but realistic data that does not contain real PII. It is especially useful when you need to test a model or debug a pipeline without using sensitive information.

- Generate images using GANs or diffusion models.

- Simulate text data while preserving the structure, but without real names or addresses.

- Create anonymized medical records for training NLP models.

Synthetic data is not always a complete replacement for real data, but in combination with original datasets it helps reduce privacy risks in AI training.
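For structured text, the idea of preserving structure while replacing real names and addresses can be sketched as follows. The name and street lists are invented for the example; generating realistic free text or images would require the generative models mentioned above.

```python
import random

# Invented values for illustration; none refer to real people or places.
FAKE_NAMES = ["Alex Morgan", "Sam Rivera", "Jordan Lee"]
FAKE_STREETS = ["Oak Street", "Mill Lane", "Harbor Road"]


def synthesize_record(template: dict, rng: random.Random) -> dict:
    """Return a record with the same fields as the template but invented identity values."""
    synthetic = dict(template)
    synthetic["name"] = rng.choice(FAKE_NAMES)
    synthetic["address"] = f"{rng.randint(1, 199)} {rng.choice(FAKE_STREETS)}"
    return synthetic


rng = random.Random(42)  # seeded so that test datasets are reproducible
real = {"name": "Maria Schmidt", "address": "12 Hauptstrasse", "complaint": "late delivery"}
fake = synthesize_record(real, rng)
```

The non-identifying field (`complaint`) is carried over unchanged, so the record stays useful for pipeline debugging while the identity fields are replaced.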

Expert Tip: Tinkogroup has developed an internal set of tools that are automatically integrated into the machine learning workflow and ensure privacy protection at all stages of annotation:

- Automatic PII detection — the system scans images, audio, and text for names, faces, numbers, and other identifiers.

- Contextual blurring — detected elements are masked without losing contextual information for annotation.

- Security checkpoints — before each data transfer, a check is made for compliance with privacy-compliant AI annotation requirements.

- Monitoring and reports — the customer receives a detailed report on what security measures have been applied.

As a result, clients receive highly accurate datasets that comply with legal requirements, while the risk of leakage is minimized.

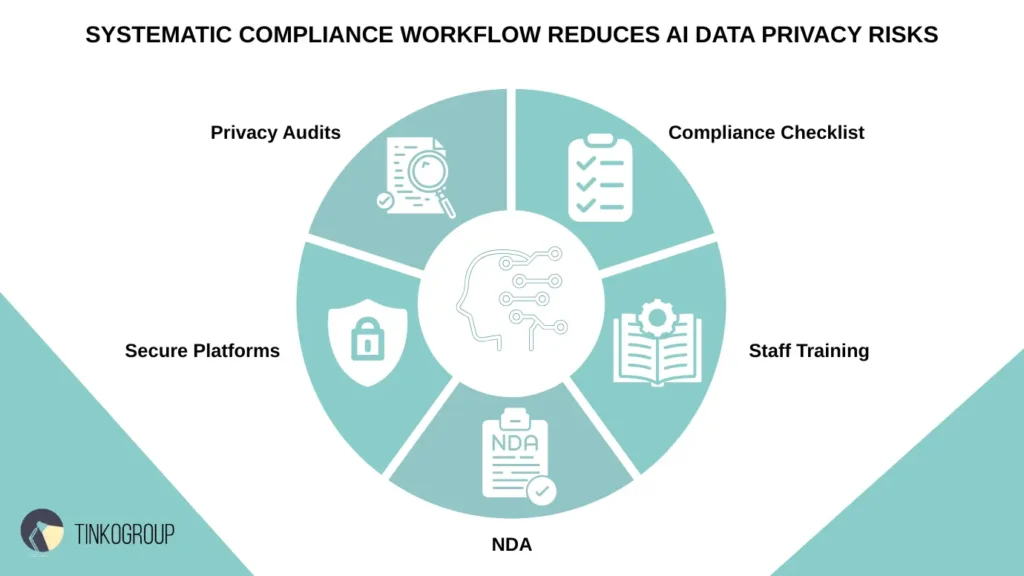

Compliance Workflow for AI Annotation

For privacy-compliant AI annotation to stop being a one-off initiative and become a sustainable practice, a systematic and repeatable approach is needed. This approach covers the entire life cycle of working with data, from the moment of collection to the operation of the finished model in a production environment.

Not only the technical component matters here, but also the organizational culture: every employee, team, and process must be organized so that data protection is part of the project’s DNA. A compliance workflow is not box-ticking bureaucracy, but a living, constantly improving process.

It helps to minimize AI data privacy risks, strengthens the trust of clients and partners, and also provides the company with reliable legal and reputational protection. It is this systematic approach that often becomes the factor that distinguishes mature AI teams from those who work on the edge of risk.

Setting Up a Compliance Checklist

Creating and implementing a compliance checklist is a fundamental step in building any process related to secure data annotation. Its purpose is not limited to recording regulations: it should become a working tool that guides the team at every stage of interaction with data. The checklist helps the team avoid missing important details, ensures a uniform approach across different projects, and serves as internal evidence that the company handles privacy-compliant AI annotation systematically.

An effective checklist always begins with a thorough analysis of legal requirements. It incorporates the requirements of regulations such as GDPR, CCPA, and HIPAA, as well as local laws in force in the client’s jurisdictions. These provisions are combined with the company’s internal policies, which can be even stricter than the legal minimum.

Thus, the document becomes not just a list, but a kind of “code” for the team working with data. Ideally, the checklist covers the entire data lifecycle, from data acquisition and initial validation to deletion after the project is completed. Each type of data (images, text, audio, or medical records) has its own control questions that help determine the level of risk and the protection requirements. This is especially important when the project is carried out in several jurisdictions at once, where the requirements may differ.

In practice, as implemented at Tinkogroup, the digital checklist is integrated with the task management system. When a new project is launched, the checklist is generated automatically and assigned to specific team members. Each action, whether it is data loading, the anonymization stage, or file transfer to the client, is recorded in the system with a timestamp. This approach not only reduces human error, but also creates a detailed log that can be presented during an audit or in response to a regulator’s request.
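The general shape of such a timestamped checklist can be sketched with a couple of dataclasses. The class names, step names, and assignees are hypothetical; this illustrates the idea and does not describe Tinkogroup's system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class ChecklistItem:
    step: str
    assignee: str
    completed_at: Optional[datetime] = None  # set when the step is confirmed


@dataclass
class ComplianceChecklist:
    project: str
    items: List[ChecklistItem] = field(default_factory=list)

    def complete(self, step: str) -> None:
        """Mark a step as done and stamp it with the current UTC time."""
        for item in self.items:
            if item.step == step:
                item.completed_at = datetime.now(timezone.utc)
                return
        raise KeyError(f"unknown checklist step: {step}")

    def pending(self) -> List[str]:
        """Steps that still block the project from moving forward."""
        return [item.step for item in self.items if item.completed_at is None]


checklist = ComplianceChecklist(
    project="demo-project",
    items=[
        ChecklistItem("data loading", "ingest-team"),
        ChecklistItem("anonymization", "privacy-team"),
        ChecklistItem("file transfer to client", "delivery-team"),
    ],
)
checklist.complete("data loading")
```

After the call above, `checklist.pending()` lists the two remaining steps, and the completed item carries a UTC timestamp that can be exported for an audit.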

Because the checklist is a living document and is regularly updated, it takes into account changes in laws as well as the experience of past projects. This transforms it from a formal document into a real working tool that maintains a high level of quality and security at all stages of annotation.

Staff Training and NDAs

Even the most advanced technical means will not protect data if the people working with it do not have sufficient knowledge and understanding of the risks. Therefore, personnel training is not a formality, but a strategically important element of the ethical data annotation workflow. As part of such training, employees are introduced to methods of identifying and processing PII, learn to work with anonymized and synthetic data, and work through real-life scenarios for responding to a suspected leak or erroneous labeling.

Another important step is signing an NDA (non-disclosure agreement) before work starts. This document makes the responsibility for maintaining confidentiality legally binding and reinforces discipline within the team. In one of the medical projects, Tinkogroup implemented an interactive training format in which employees went through simulations of real cases, faced typical and atypical data protection problems, and made decisions under time pressure. This approach reduced the number of labeling errors by 40% during the first quarter.

Secure Annotation Platforms with Audit Logs

Using secure data annotation platforms with a full-fledged audit system is a key element of data protection in the privacy-compliant AI annotation process. Such platforms not only provide technical security, but also create transparency of all team actions, which is critical for compliance with legal requirements and internal company standards.

The main goal of a platform with audit logs is to record every action related to data. This includes uploading files, changing labels, and exporting or deleting information. All actions are saved with precise timestamps and user identification, which makes it possible to restore the full history of work with a specific data object at any time. Such transparency deters unauthorized access, makes it detectable when it does occur, and allows the customer or regulator to verify compliance with security protocols.

Technical security measures in such platforms include data encryption at all stages of transmission and storage, multi-factor authentication of users, and role-based separation of access rights. This means that annotators see only the information that is necessary to complete their tasks, and administrators can control the process without the risk of disclosing confidential information.

Tinkogroup’s own platform with immutable audit logs makes it possible not only to record actions, but also to analyze processes in detail. For example, when working with medical images, each data element goes through a system that automatically checks for PII, records what changes were made, and stores metadata about the user who performed the labeling. Such a system creates a documented chain of responsibility and significantly reduces AI data privacy risks, giving clients and partners confidence in the security of the project.
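One common way to make a log tamper-evident is hash chaining, where each entry stores the hash of the previous one. The sketch below illustrates that general technique with hypothetical field names; it is not a description of how any specific platform implements its audit log.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _hash_body(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log in which every entry commits to the one before it."""

    def __init__(self):
        self.entries = []

    def append(self, user: str, action: str, obj: str, timestamp: str) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {"user": user, "action": action, "object": obj,
                "timestamp": timestamp, "prev_hash": prev_hash}
        self.entries.append({**body, "hash": _hash_body(body)})

    def verify(self) -> bool:
        """Recompute the chain; any edited or removed entry breaks it."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _hash_body(body):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("annotator_17", "change_label", "image_0042", "2024-01-15T09:30:00Z")
log.append("qa_03", "export", "batch_007", "2024-01-15T10:05:00Z")
```

Here `log.verify()` returns `True`, and it would return `False` if any stored entry were edited afterwards. A production system would additionally anchor the latest hash somewhere the log's operators cannot rewrite.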

The use of such platforms also improves project manageability. Managers can track progress in real time, identify bottlenecks and promptly adjust the annotation process without violating confidentiality requirements. In addition, immutable audit logs make it possible to conduct internal and external audits without stopping the team’s work, which is critical for large-scale and long-term projects.

Regular Privacy Audits

Regular audits are a way to ensure that data protection measures remain relevant, comply with changing legislation, and reflect new technical standards. At Tinkogroup, internal audits are conducted monthly or quarterly, depending on the nature of the project. Once a year, independent external experts are engaged to analyze not only the procedures, but also the infrastructure, including vulnerability testing and an assessment of the encryption algorithms used.

In one of the privacy-safe AI training projects, the Tinkogroup team identified an outdated encryption mechanism before the issue became the subject of regulators’ attention. Thanks to early detection and rapid replacement of the algorithm, the company avoided potential fines and showed the client that a proactive approach to security is not just words, but an integrated element of the corporate culture.

Technology & Tools for Secure Annotation

The technology base is the foundation for ensuring privacy-compliant AI annotation. Even with ideal processes and strict data processing rules, it is the tools and platforms that determine how reliably protection is implemented. In the modern AI landscape, the emphasis is shifting from simply storing information in encrypted form to comprehensive solutions that integrate into workflows and minimize AI data privacy risks at every stage, from loading a dataset to putting a model into production.

Overview of Privacy-Compliant Annotation Platforms

Modern platforms for secure data annotation are more than just labeling interfaces. They include access control mechanisms, built-in anonymization tools, automatic PII detection, and a detailed audit system. Such solutions allow annotators to work with only the fragment of data that is necessary for a specific task, while the entire process is recorded in secure logs.

Platforms that meet strict GDPR or HIPAA requirements often have modules for automatic metadata removal, replacing sensitive elements with synthetic ones, and isolating data segments for independent processing. At Tinkogroup, we use both in-house solutions of this kind and adapted corporate versions of commercial products, which allows us to achieve full process control.

Encryption, Watermarking, Automated PII Detection

Encryption is a basic, but far from the only, security tool in the ethical data annotation workflow. In advanced scenarios, multi-level encryption is used: data is encrypted both at rest and during transmission between servers. Watermarking also plays an important role: invisible digital marks make it possible to trace the source of a leak if one occurs. This approach is especially relevant for images and videos, where it is impossible to completely remove visually recognizable information.

Additionally, modern systems implement automatic PII recognition: machine learning algorithms scan datasets in real time, finding names, addresses, phone numbers, faces, and other unique identifiers, and then either block their display to annotators or replace them with synthetic equivalents. This not only increases the level of security, but also reduces human error during manual data verification.
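To give a feel for how an invisible mark can identify the recipient of an image, here is a toy least-significant-bit (LSB) watermark on a flat list of 8-bit pixel values. This is a deliberately simple assumption for illustration: LSB marks do not survive re-compression or resizing, and production systems rely on more robust watermarking schemes.

```python
def id_to_bits(recipient_id: int, width: int = 16) -> list:
    """Encode an integer ID as a fixed-width list of bits, lowest bit first."""
    return [(recipient_id >> i) & 1 for i in range(width)]


def bits_to_id(bits: list) -> int:
    return sum(bit << i for i, bit in enumerate(bits))


def embed_watermark(pixels: list, mark_bits: list) -> list:
    """Write one watermark bit into the lowest bit of each leading pixel value."""
    if len(mark_bits) > len(pixels):
        raise ValueError("watermark is longer than the image")
    marked = list(pixels)
    for i, bit in enumerate(mark_bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the lowest bit, then set it
    return marked


def extract_watermark(pixels: list, length: int) -> list:
    return [value & 1 for value in pixels[:length]]


pixels = list(range(100, 132))  # stand-in for 32 grayscale pixel values
marked = embed_watermark(pixels, id_to_bits(4021))
recovered = bits_to_id(extract_watermark(marked, 16))
```

Each pixel value changes by at most 1, which is invisible to the eye, yet the hypothetical recipient ID can be read back from a leaked copy.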

Integrating Compliance Checks into the ML Pipeline

True data protection is impossible without deep integration of compliance checks into the ML pipeline itself. Access control in data annotation and automatic checks should not run as separate activities, but at each step of model preparation and training. This approach makes it possible to identify violations immediately, and not after the fact, when the model has already been trained on incorrect data.

For example, when loading a dataset into the annotation system, the PII search module is automatically launched, after which the data undergoes a stage of pseudonymization or anonymization, depending on the project policy.

At the model training stage, built-in audit procedures check that the source data meets legal requirements, and after receiving the final model, testing is carried out to ensure that there is no information leakage in the predictions. At Tinkogroup, we implement such multi-level checks in work processes, which makes privacy-safe AI training not just a slogan, but a real practice built into the project architecture.
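Two of these checks can be sketched very simply: a gate that refuses to start training if any record has not been processed, and a post-training test that looks for planted "canary" strings in model outputs. The `privacy_status` field, its allowed values, and the canary approach are assumptions chosen for the example.

```python
CLEARED_STATUSES = {"anonymized", "pseudonymized"}


class ComplianceError(Exception):
    """Raised when data that has not passed privacy processing reaches training."""


def find_unprocessed(records: list) -> list:
    """Return the IDs of records that lack a cleared privacy status."""
    return [r["id"] for r in records if r.get("privacy_status") not in CLEARED_STATUSES]


def training_gate(records: list) -> list:
    """Pass records through unchanged, or stop the pipeline if any are unprocessed."""
    blocked = find_unprocessed(records)
    if blocked:
        raise ComplianceError(f"unprocessed records blocked from training: {blocked}")
    return records


def leaks_canary(predictions: list, canaries: list) -> bool:
    """True if any model output reproduces a planted marker string verbatim."""
    return any(canary in prediction for prediction in predictions for canary in canaries)


clean = [
    {"id": 1, "privacy_status": "anonymized"},
    {"id": 2, "privacy_status": "pseudonymized"},
]
mixed = clean + [{"id": 3, "privacy_status": "raw"}]
```

Calling `training_gate(clean)` returns the records, while `training_gate(mixed)` raises `ComplianceError` and names record 3. A verbatim canary check catches only the crudest form of leakage, so it complements the upstream controls and does not replace them.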

Case Study: Privacy-First Annotation in a Global Healthcare AI Project

In 2023, Tinkogroup received a request from an international healthcare company that was developing an AI platform for analyzing X-ray and MRI images to speed up the diagnosis of rare diseases. The task was ambitious: to collect and label more than 500,000 medical images coming from clinics in Europe, the US, and Asia, while strictly adhering to GDPR, HIPAA, and local data protection laws.

It was clear from the outset that the project presented a serious challenge in terms of data privacy in AI annotation. Medical images often contain not only visual data about the patient’s health, but also hidden metadata — from name and age to clinic identifiers and even GPS coordinates of the examination location. Any leak or error during processing could lead to multi-million dollar fines and undermine the trust of both patients and the company’s partners.

We started with a large-scale audit of incoming data. Tinkogroup’s automated PII detection algorithms revealed that about 17% of images contained personal data embedded in the DICOM files, including patient names and examination dates. This data was immediately pseudonymized. In cases where removal could affect the clinical value of the image, we used synthetic replacement algorithms, for example generating artificial labels that are not associated with real people but preserve the data structure.
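The general idea of replacing identifying header fields with artificial labels can be sketched on a plain dictionary that stands in for a DICOM header. The list of tags treated as identifying, the `SYN-` label format, and the class name are assumptions for illustration; a real pipeline would use a DICOM library and a formally defined de-identification profile.

```python
from itertools import count

# Hypothetical subset of header fields treated as identifying in this sketch.
IDENTIFYING_TAGS = {"PatientName", "PatientID", "PatientBirthDate", "StudyDate"}


class HeaderPseudonymizer:
    """Replaces identifying header fields with a consistent artificial label."""

    def __init__(self):
        self._codes = {}
        self._counter = count(1)

    def code_for(self, patient_id: str) -> str:
        # The same patient always gets the same label, so studies stay linked.
        if patient_id not in self._codes:
            self._codes[patient_id] = f"SYN-{next(self._counter):06d}"
        return self._codes[patient_id]

    def scrub(self, header: dict) -> dict:
        code = self.code_for(header["PatientID"])
        cleaned = {k: v for k, v in header.items() if k not in IDENTIFYING_TAGS}
        # Keep the name and ID fields present so downstream tools see the usual structure.
        cleaned["PatientID"] = code
        cleaned["PatientName"] = code
        return cleaned


scrubber = HeaderPseudonymizer()
first = scrubber.scrub({"PatientName": "Doe^Jane", "PatientID": "12345",
                        "StudyDate": "20230114", "Modality": "MR"})
second = scrubber.scrub({"PatientName": "Doe^Jane", "PatientID": "12345",
                         "StudyDate": "20230302", "Modality": "CR"})
```

Both scrubbed headers carry the same artificial label, the clinical field (`Modality`) is preserved, and the examination date is dropped.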

For labeling, we used our own secure data annotation platform with multi-level encryption, watermarks on each image, and role-based access. Annotators saw only the depersonalized fragments of images needed for a specific task. Any attempts at unauthorized access or data downloading were recorded in a secure audit log.

Particular attention was paid to training the team. More than 80 specialists involved in the project completed an in-depth course on ethical data annotation workflows, including legal aspects, anonymization methods, and working with encrypted data. We also implemented daily automatic dataset checks to ensure that unprocessed images did not get into the training pipeline.

The result exceeded expectations. The project was completed three weeks ahead of schedule, and not a single privacy incident was recorded. Moreover, the implementation of privacy-safe AI training methods allowed the customer to certify the product for use in clinics in Europe and the USA without additional data security modifications.

Expert Tip: This case showed that integrating data protection into every stage, from receiving a dataset to deploying a model, not only prevents legal and reputational risks, but also speeds up the project, because it minimizes the need for rework at the final stages.

Conclusion

As we have explored, ensuring data privacy in AI annotation is no longer just a compliance checklist item—it is a strategic asset that defines the long-term success of your AI product. Navigating the complexities of GDPR, HIPAA, and CCPA requires more than just secure storage; it demands a holistic approach that integrates ethical data annotation workflows, advanced encryption technologies, and continuous staff training.

At Tinkogroup, we understand that every dataset is unique and requires a tailored security approach. Whether you are working with medical records, financial documents, or biometric data, our team is ready to support you. Explore our secure data annotation services to learn more about our infrastructure, or contact our experts today to discuss how we can build a compliant and efficient annotation pipeline for your next AI project.

Why is data privacy critical in AI annotation projects?

AI annotation often involves sensitive data such as images of people, medical records, voice recordings, or personal texts. Without proper privacy controls, this data can be exposed, leading to regulatory fines (GDPR, HIPAA, CCPA), legal risks, and loss of user trust. Privacy-first annotation ensures both legal compliance and long-term product credibility.

What types of data require special protection during AI annotation?

Data that contains or can reveal Personally Identifiable Information (PII) requires enhanced protection. This includes facial images, voice recordings, medical and financial records, geolocation data, biometric identifiers, and even text data that may indirectly identify individuals when combined with other information.

How can companies ensure privacy-compliant AI annotation at scale?

Privacy-compliant AI annotation requires a combination of technical and organizational measures: anonymization or pseudonymization of data, role-based access control, encrypted storage and transfer, secure annotation platforms with audit logs, regular privacy audits, and continuous staff training. Integrating these controls directly into the ML pipeline helps reduce risks without slowing down development.