AI development is rarely limited to just building a neural network. On the contrary, the foundation of successful projects lies in the data fed to the model. If the data is labeled incorrectly, no architecture can compensate for this gap.

Data labeling mistakes are a hidden pitfall that prevents models from achieving the required accuracy. Research shows that up to 80% of project time is spent not on algorithms, but on data preparation and annotation. If this stage is performed incorrectly, all subsequent steps become endless corrections and rework.

A systematic approach helps avoid problems. Careful attention tohttps://tinkogroup.com/data-annotation-quality-control-guide/ instructions, training annotators, and the proper use of automation, quality control, and metrics can improve AI model performance and reduce costs.

Below, we discuss seven key mistakes most commonly encountered in the industry and how to prevent them.

Unclear Labeling Guidelines

Unclear instructions for annotators are the first and most common cause of data chaos.

When rules are described superficially, different annotators begin to interpret the task differently. One person labels an object as a “chair,” another as an “armchair,” and a third as a “seat.” For a human, such differences are not critical, but for a model, they lead to discrepancies and errors.

Leads to Data Labeling Errors and Inconsistencies

Data labeling errors in such cases become systemic. When scaling a project, thousands of inconsistent labels end up in the training set. Over time, these inconsistencies compound into dataset quality problems that silently sabotage AI models, even when architectures and training pipelines are well designed. The model learns from inconsistent examples and exhibits low stability.

Furthermore, such errors are difficult to spot immediately. During pilot testing, everything may seem correct, but in industrial use, the model’s accuracy drops sharply.

Real-World Examples of Poor AI Data Labeling Practices

A good example is e-commerce projects. One company tried to train a model to distinguish between shoe categories. However, some annotators used the label “sneakers,” others “running shoes,” and still others “footwear.” As a result, product classification performed poorly, users received incorrect recommendations, and businesses lost conversions. This is a classic example of poor AI data labeling practices.

Similar examples occur in medicine. If the instructions don’t specify that medical reports be labeled according to a specific scheme, annotators choose their own labels. As a result, diagnoses and symptoms end up in different categories, which reduces the accuracy of the clinical decision support model.

Expert Tip: Keep Guidelines Visual, Iterative, and Updated

Visual instructions are the best solution. Screenshots, diagrams, and tables with examples of correct and incorrect labeling help reduce errors. It’s also important to update the document iteratively: the first batches of data often reveal errors that need to be corrected promptly.

Thus, clear and visual guidelines become the foundation of high-quality annotation.

Weak Annotator Training

Even perfect instructions will be ineffective if the annotators themselves don’t understand the task.

The industry still widely believes that annotation is a simple, routine task that can be delegated to anyone. In practice, however, without training, the results are mediocre.

How Annotator Training Mistakes Reduce Machine Learning Annotation Quality

Annotator training mistakes lead to decreased machine learning annotation quality. Untrained annotators make basic errors: they miss important details, ignore context, and misinterpret instructions.

For example, when labeling images for self-driving cars, untrained annotators may label road signs differently or completely fail to consider temporary obstacles, such as construction cones. As a result, the model “learns” to ignore important elements of the road environment, which creates a safety risk.

The Value of Onboarding, Calibration Tasks, and Feedback Loops

Good practice includes three stages:

- onboarding — explaining the project’s purpose, introducing tools, and demonstrating benchmark examples;

- calibration tasks — test tasks that help identify discrepancies in understanding instructions;

- feedback loops — regular feedback. Performers should know where they made mistakes and understand how to improve their results.

This system not only reduces errors but also speeds up work: trained annotators complete tasks faster and more confidently.

Blind Reliance on Automation

Automation has long been a part of the annotation field. Many companies use pre annotation and automated annotation to speed up processes. However, over-reliance on algorithms can backfire.

Dangers of Overusing Tools: Risks of Automated Pre-Labeling

When tools are used without supervision, the risks of automated pre-labeling become obvious. System errors propagate throughout the dataset. If an algorithm incorrectly identifies an object, annotators often fail to correct the label, trusting the tool.

One study on medical imaging showed that using only automated labeling resulted in error rates as high as 30%. This is unacceptable for clinical applications, where the cost of error is too high.

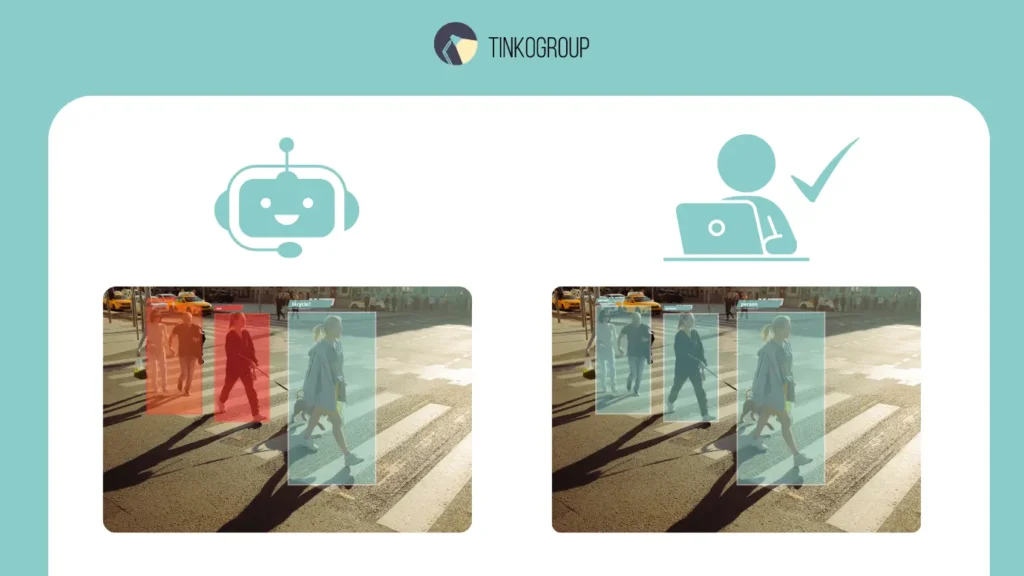

Why Automation Must Be Paired with Human-in-the-Loop Annotation

Automation must be combined with supervision. A human-in-the-loop (HIL) checks the data, corrects errors, and ensures that the system does not assign incorrect labels. Automated annotation speeds up the process, but without human review, it becomes a source of problems. Therefore, an effective approach is a combination of a machine assisting with routine tasks and a human reviewer reviewing critical data.

Lack of Dataset Diversity

The importance of data diversity is difficult to overstate. Models learn from what they are fed. If the dataset is limited, the model will reproduce its limitations.

Lack of diversity is one of the most dangerous data labeling mistakes, as it is often discovered after a product has been launched.

Risks of Ignoring Dataset Diversity in AI

Ignoring diversity leads to selection bias. Algorithms begin to work correctly only in narrow scenarios and fail when faced with non-standard data.

How Skewed Datasets Affect Model Generalization

Facial recognition is a prime example. Well-known studies have shown that systems trained primarily on images of people of one ethnicity demonstrated significantly lower accuracy when working with others. This has sparked public outcry and serious debate about AI ethics.

In healthcare, biases are also critical. If a model analyzes medical images of patients from only one region, it performs poorly in diagnostics in other settings. As a result, AI model performance drops sharply.

The solution lies in deliberately expanding the datasets, incorporating different scenarios, ethnic groups, imaging conditions, and other factors.

Reliable Data Services Delivered By Experts

We help you scale faster by doing the data work right - the first time

Non-Compliance and Security Issues

Working with data always comes with responsibility. Data labeling compliance is not a formality, but a mandatory requirement for AI projects.

Missing Data Labeling Compliance Leads to Legal and Ethical Risks

A lack of control over compliance leads to legal and ethical risks. Violating GDPR in Europe or HIPAA in the US threatens not only fines but also the loss of customer trust.

Special Focus: Healthcare, Finance, Government Datasets

The most vulnerable industries are healthcare, finance, and the public sector. In medicine, the disclosure of patient data can have catastrophic consequences. In finance, leaks of client information lead to millions in losses. In government projects, anonymization errors can threaten national security.

Companies must implement strict security processes: encryption, access control, and anonymization. Data labeling compliance is becoming not an option, but a required standard.

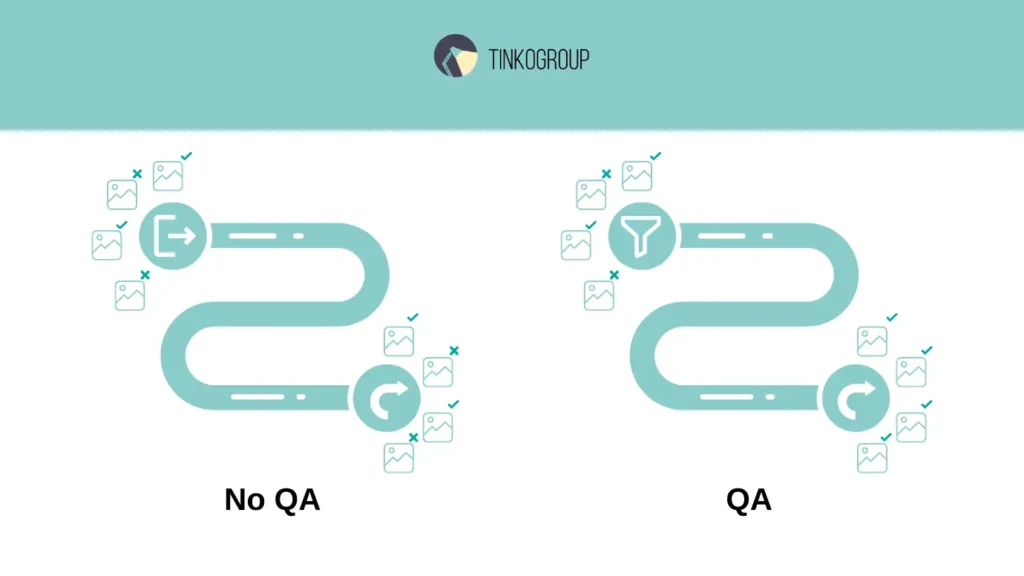

Missing Quality Assurance

Even with clear instructions and a carefully trained team of annotators, it’s impossible to completely avoid errors. Human error, fatigue, differences in interpretation, and technical glitches inevitably lead to errors creeping into the dataset. Therefore, any serious project requires a multi-layered quality assurance (QA) system.

Lack of QA Pipelines → AI Model Performance Issues

Lack of quality assurance directly leads to degraded results. Errors in annotations end up in training sets, and the model ends up learning from biases rather than facts. This causes a whole range of AI model performance issues:

- a drop in accuracy on real data;

- an increase in false positive and false negative predictions;

- model instability during production use;

- loss of trust from businesses and clients.

For example, in a medical image recognition project, QA was minimal. As a result, some images were incorrectly labeled: benign lesions were classified as malignant and vice versa. The model showed good results in tests, but in practice, the error rate was critical. After implementing multi-level QA, accuracy increased by more than 12%, and the risk of misdiagnosis decreased.

The Role of Multi-Step Validation

The best approach is a combined one. Automatic scripts check the data format and identify empty labels or duplicates. Peer review allows annotators to verify each other’s work. Expert verification ensures that the final data meets the project’s goals.

Such a system reduces errors by an order of magnitude and increases confidence in the results.

Not Measuring Accuracy Improvements

Even with a perfectly structured annotation process, you can’t rest on your laurels. Data quality isn’t static: it changes as the project scales, new annotators are added, instructions change, and automation is used. Therefore, without constant monitoring of labeling metrics, it’s impossible to maintain a high level of quality.

Why Failing to Track Progress Blocks Improving Data Labeling Accuracy

If a team doesn’t track progress, they don’t know whether the project is moving in the right direction. The situation can be deceptive: the number of labeled objects increases, deadlines are met, but the model doesn’t demonstrate the expected accuracy. This is often due to the fact that data quality has deteriorated without being noticed.

Without metrics, it’s impossible to objectively evaluate the effectiveness of changes. For example, implementing automatic pre-labeling can reduce processing time, but if no one measures the error rate, the resulting quality can plummet. Therefore, a lack of monitoring blocks improving data labeling accuracy and leaves the project vulnerable to hidden problems.

Key Metrics: IAA, Throughput, Error Rates, Time-to-Quality

To monitor the process, key quality and performance indicators are used:

- Inter-Annotator Agreement (IAA) is a measure of the consistency of annotations between annotators. A low IAA indicates that instructions are being interpreted differently, and the data is inconsistent. This is a signal that guidelines need to be updated or additional training is needed.

- Throughput is the speed of data processing. It’s not just the number of objects annotated per hour or day that’s important, but also the stability of this metric. Sharp fluctuations in throughput often indicate an overloaded team or a lack of understanding of the task.

- Error rates are the percentage of errors identified during the QA phase. Tracking this metric shows how well annotators adhere to instructions and how often automation makes mistakes.

- Time-to-quality is the time it takes for a project to achieve a predetermined level of accuracy. If this metric is too high, the processes are inefficient and the pipeline needs to be reconsidered.

Practical examples of metrics in action:

- In a computer vision project, the team noticed that the IAA had dropped below 70%. Analysis showed that some annotators were confusing the “bicycle” and “motorcycle” classes. After updating the instructions, consistency increased to 90%, and model accuracy improved by 8%.

- In a medical project, throughput was high, but error rates exceeded 20%. This meant that the annotators were rushing, sacrificing quality. After introducing additional control tasks, the error rate was halved.

- In a financial NLP project, the time-to-quality metric exceeded the planned deadline by twice as much. The team discovered that the instructions were too complex and required revision. After simplifying them, the process accelerated, and the resulting accuracy increased.

Measuring metrics alone is not enough. It is important to build a closed-loop improvement process:

- data collection — recording IAA, throughput, error rates, and other metrics;

- analysis — identifying bottlenecks, such as low consistency or increasing error rates;

- correction involves refining instructions, retraining annotators, and configuring automation;

- repeated measurement is a check to see if the intervention has yielded positive results.

This cycle allows for consistent data quality to be maintained throughout the project, not just at the start.

Conclusion

Annotation errors are a hidden but powerful factor reducing the effectiveness of AI projects. Data labeling mistakes lead to increased costs, delays, and low accuracy. However, all of these are controllable.

Clear instructions, annotator training, judicious use of automation, dataset diversification, compliance, QA implementation, and the use of metrics create a solid foundation for strong models.

Companies that invest in this process gain a competitive advantage, bringing products to market faster and achieving better accuracy.

Tinkogroup offers solutions that address all of these aspects. Our services are built on the principles of quality, security, and scalability. Learn more about our capabilities on the data annotation services page.

Why is data labeling considered the most critical part of AI development?

Research shows that up to 80% of project time is spent on data preparation. No matter how advanced your neural network architecture is, if the data is labeled incorrectly (“garbage in”), the model will produce poor results (“garbage out”).

Can I rely solely on automated data labeling tools to save time?

No, blind reliance on automation is risky. Studies show automated tools can have error rates as high as 30% in complex tasks. A Human-in-the-Loop approach, where humans verify and correct automated labels, is essential for ensuring accuracy and safety.

How do I know if my data labeling quality is improving?

You must track specific metrics. The most important ones are Inter-Annotator Agreement (to check consistency between workers), Error Rates (to ensure adherence to guidelines), and Throughput (to monitor speed without sacrificing quality).