Even the most experienced AI teams sometimes fail where they seem to have everything under control: at the model training stage. These are not newcomers but specialists who have worked on dozens of projects and know the ML stack by heart, yet still run into situations where the model “doesn’t learn properly.” Put simply, model training is the process in which an algorithm works through data, identifies patterns, and forms internal rules so that it can make meaningful predictions or decisions on new data.

In machine learning, this is similar to how a person learns: we read, try, make mistakes, and try again, gradually getting better. The difference is that the model has no intuition; it depends entirely on which data we give it and how.

So why can even strong AI teams stumble here?

There are many problems:

- pressure from the business to “bring the product into production faster”;

- lack of quality data;

- poorly structured machine learning workflow;

- training cycles that are too long without intermediate validation;

- underestimating the importance of testing and feedback.

The cost of error here is high. Bad AI model training practices lead to missed deadlines, budget overruns, and the emergence of models that look “smart” in demos, but in production conditions give chaotic and useless answers.

In my practice, there was a case when, due to hasty training of a model using reduced datasets, a company lost three months of development and several large clients because the product did not produce stable forecasts. The losses amounted to hundreds of thousands of dollars.

In this article, we look at five key signs that your team needs to reconsider its approach to model training, and at how to fix the underlying problems without stopping work on the project.

Sign 1 — Inconsistent Model Performance

One of the first and most alarming signs of problems in model training is unstable model performance. This refers to a situation where quality metrics — such as accuracy, precision, recall, or F1-score — fluctuate significantly from run to run or under different operating conditions.

For example, a model shows 91% accuracy on a test dataset but only 76% on real data. Or precision (the share of positive predictions that are actually correct) jumps to 0.88 in one run and drops to 0.62 in the next. For the business, this sounds like “today the model makes almost no mistakes, but tomorrow it produces inconsistent forecasts.”
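
To make the metrics above concrete, here is a minimal sketch of how accuracy, precision, recall, and F1 can be computed for a binary task. The function name `classification_metrics` and the `positive` parameter are illustrative assumptions, not part of any particular library.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    # Count the confusion-matrix cells from (true label, predicted label) pairs.
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn

    # Precision: of everything predicted positive, how much was right.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: of everything actually positive, how much was found.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}
```

Computing the same set of metrics on the test set and on a sample of production data, with the same code, is one simple way to see a gap like the 91% versus 76% one described above.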

What this means in practice:

- Managers lose confidence in the product and postpone the release.

- Key decisions are made based on unpredictable results.

- Departments that depend on the model (for example, marketing or risk management) are forced to restructure processes and allocate time for manual verification.

Often instability is a symptom of a deeper problem:

- weak representativeness of training data;

- overfitting to patterns that are specific to the training set;

- overly aggressive hyperparameter tuning;

- lack of systematic monitoring.

Tinkogroup Case Study

One of our clients, a fintech company that forecasts customer solvency, found that the accuracy of its credit scoring model could swing by ±12% within a week, depending on the day and time of day. We audited the quality of the training data and implemented a stable sampling procedure for it, along with automatic verification of metrics at each stage of deployment. As a result, the indicators stabilized and fluctuations no longer exceeded ±2%, which significantly increased management confidence and sped up the product’s move into production.
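
An automatic metric check of this kind can be quite small. The sketch below is not the pipeline used in the case study; it only illustrates the idea of flagging a model whose metric varies too much between runs. The function name, the `max_spread` tolerance, and the sample values are all assumptions made for the example.

```python
from statistics import mean, pstdev


def stability_report(scores, max_spread=0.04):
    """Summarize a metric measured over several runs and flag instability.

    max_spread is a hypothetical tolerance: the allowed gap between the
    best and the worst run (0.04 is roughly +/-2 percentage points).
    """
    if len(scores) < 2:
        raise ValueError("need at least two runs to judge stability")
    spread = max(scores) - min(scores)
    return {
        "mean": mean(scores),
        "std": pstdev(scores),
        "spread": spread,
        "stable": spread <= max_spread,
    }


# Made-up accuracy values for five evaluation runs within one week.
weekly_accuracy = [0.91, 0.79, 0.88, 0.76, 0.90]
report = stability_report(weekly_accuracy)
```

A deployment step could refuse to promote a model when `report["stable"]` is false, which turns "the metrics feel jumpy" into an explicit gate.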

Sign 2 — Long Training Cycles Without Clear Gains

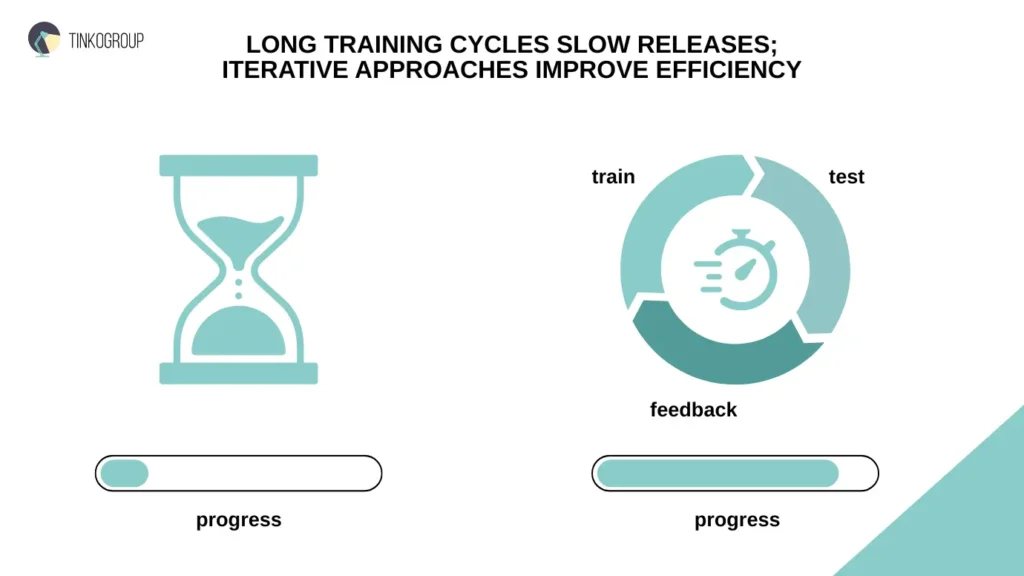

The second typical problem in AI model training is excessively long training cycles that do not bring noticeable improvement. It is like driving a car around a race track for weeks without ever learning to take the turns faster.

Why it’s dangerous for business:

- The launch of new features is delayed, and with it the product’s entry into the market.

- Competitors who have a faster machine learning workflow release updates earlier and take away audience share.

- The team spends time and computing resources on endless model runs without getting noticeable growth in metrics.

How Iterations Accelerate Improvements

Efficient ML development runs in short iterations: train the model → test → make changes → repeat. Such a cycle lets you quickly identify which hypotheses work and which do not, instead of getting stuck in month-long training “marathons”. It is important not to confuse “more epochs” with “better results”: often a few validation checks are enough to see that the model has reached a plateau and further training gives minimal gains.

In many teams, these long cycles persist not because of model complexity, but because data preparation is repeated from scratch instead of using prepared AI datasets that enable faster prototyping and earlier performance validation.

Tools and approaches that really reduce training time:

- Distributed training: parallelizing computation across several nodes or GPUs.

- Cloud ML: using scalable cloud capacity to match resources to peak loads.

- Optimization techniques: learning rate schedules, early stopping, compressed data formats, and mini-batch training.
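
Of the techniques listed, early stopping is the easiest to show in a few lines. The following is a framework-independent sketch: the class name, `patience`, and `min_delta` are illustrative choices, and most training frameworks ship their own equivalent.

```python
class EarlyStopping:
    """Stop training when the validation loss stops improving."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience    # epochs to wait without improvement
        self.min_delta = min_delta  # smallest decrease that counts as progress
        self.best = float("inf")
        self.bad_epochs = 0

    def should_stop(self, val_loss):
        if val_loss < self.best - self.min_delta:
            # Real improvement: remember it and reset the counter.
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def epochs_run(val_losses, patience=3, min_delta=0.0):
    """Return how many epochs are executed before early stopping fires."""
    stopper = EarlyStopping(patience, min_delta)
    for epoch, loss in enumerate(val_losses, start=1):
        if stopper.should_stop(loss):
            return epoch
    return len(val_losses)
```

Feeding `epochs_run` a recorded validation curve from a past long run shows how many epochs would have been saved, which is a cheap way to choose `patience` before changing the real pipeline.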

Case Study from Tinkogroup

For an e-commerce client training a recommendation model, a single training cycle took almost 36 hours. We optimized the pipeline: split the training into several parallel streams, moved the computation to the cloud, and applied early stopping to avoid wasting resources on useless epochs. As a result, the cycle shrank by roughly 40%, from 36 to 21 hours, without loss of accuracy. The time needed to test hypotheses also fell by almost half, which directly increased AI team productivity and allowed updates to launch faster.

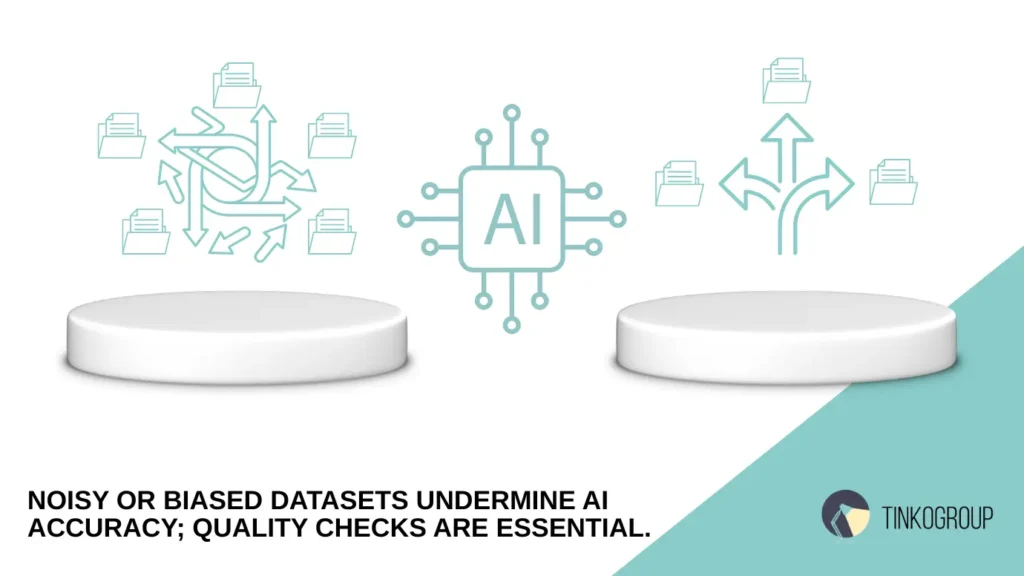

Sign 3 — Poor Data Quality Undermining Results

No matter how many resources you spend on AI model training, it can all be in vain if the input data is far from ideal. Data is the fuel for the model. If it is dirty, the engine will run intermittently or even stop altogether.

Why Data Quality Is Critical

In machine learning, even a small proportion of erroneous or unrepresentative examples can seriously distort the results. The model learns from what you give it, and cannot “guess” that some of the information is false or incomplete. In many real-world projects, this leads to dataset quality issues that quietly sabotage AI models long before deployment. The result is a model that does brilliantly in testing, but fails in production.

Typical data problems:

- Noisy data: records with typos, incorrect values, or unnecessary features.

- Bias: skewed data in which some classes or scenarios appear far more often than others, so the model starts to favor them without justification.

- Incomplete datasets: gaps in key features, missing historical information, or no data on important categories.
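
Two of these problems, incompleteness and class skew, can be measured before any training starts. The sketch below assumes the dataset is a list of dictionaries; the function name and both thresholds (`max_imbalance`, `max_missing`) are hypothetical values to be tuned per project.

```python
from collections import Counter


def profile_dataset(rows, label_key, max_imbalance=5.0, max_missing=0.1):
    """Report missing-value rates per field and the class imbalance ratio."""
    if not rows:
        raise ValueError("dataset is empty")
    n = len(rows)
    fields = {key for row in rows for key in row}
    # A value counts as missing if the key is absent, None, or an empty string.
    missing = {
        f: sum(1 for row in rows if row.get(f) in (None, "")) / n
        for f in fields
    }
    counts = Counter(
        row[label_key] for row in rows if row.get(label_key) not in (None, "")
    )
    if not counts:
        raise ValueError("no usable labels found")
    # Ratio between the most and the least frequent class.
    imbalance = max(counts.values()) / min(counts.values())
    return {
        "missing_rate": missing,
        "class_counts": dict(counts),
        "imbalance_ratio": imbalance,
        "fields_over_missing_limit": sorted(
            f for f, rate in missing.items() if rate > max_missing
        ),
        "imbalanced": imbalance > max_imbalance,
    }
```

Running such a profile on every new data delivery gives an early warning before skewed or incomplete data reaches a training run.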

How to Improve Data Quality

Improving data quality is not a one-time task, but an ongoing process built into the machine learning workflow. The earlier and more thoroughly you catch errors, noise, and biases, the fewer problems you will have during training and production. In this sense, proper data management is one of the most important elements of AI training best practices and the key to stable model results.

- EDA (Exploratory Data Analysis): a detailed study of the structure and distribution of the data to reveal anomalies and imbalances.

- Anomaly detection: automatic detection of outliers and suspicious values before the training stage.

- Labeling quality checks: systematic verification of label correctness, including cross-checks between annotators (inter-annotator agreement) and spot checks on small subsamples.
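
Two of these checks have well-known, simple formulations: the interquartile-range (IQR) rule for outliers and Cohen's kappa for agreement between two annotators. The sketch below uses only the standard library; the function names and the default `k=1.5` are conventional choices, not requirements.

```python
from collections import Counter
from statistics import quantiles


def iqr_outliers(values, k=1.5):
    """Return the values lying outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and equally long")
    n = len(labels_a)
    # Share of items on which both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected if both labeled at random with their own frequencies.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label
    return (observed - expected) / (1 - expected)
```

A kappa close to 1 means the annotators agree far more than chance would explain; values near 0 suggest the labeling guidelines need work before more data is labeled.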

Case Study from Tinkogroup

We were approached by a media service whose recommender model produced strange results: users were offered irrelevant films. The audit showed that almost 20% of the records in the dataset had incorrect or incomplete genre labels. We implemented automated label verification and configured anomaly detection for the incoming data stream. After these changes, accuracy increased by 15%, and user complaints about irrelevant recommendations fell by almost half. This is direct evidence that improving AI accuracy starts with data quality.

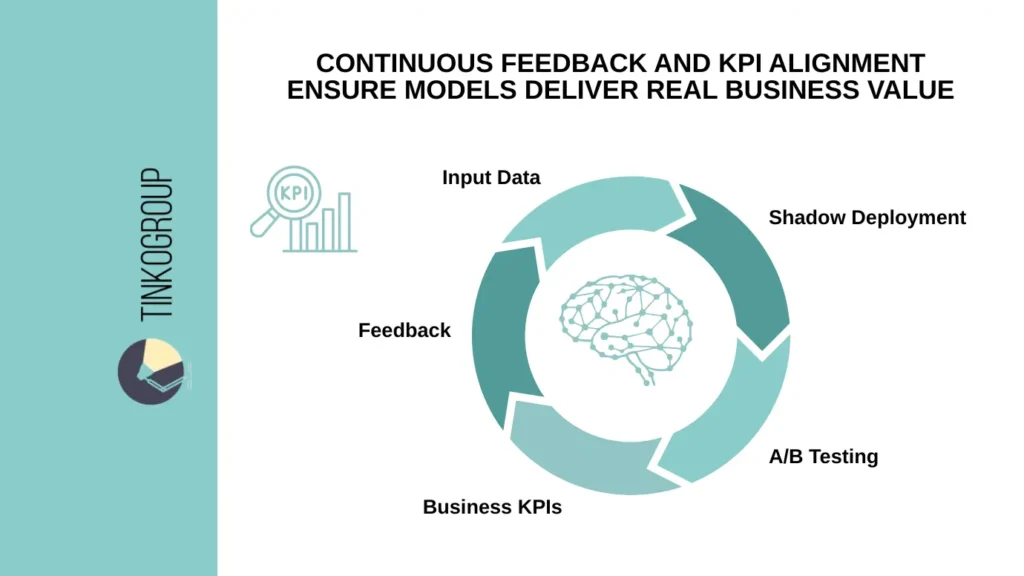

Sign 4 — Lack of Iterative Testing and Feedback Loops

Even the most advanced AI model training is meaningless if the model is not regularly tested in conditions as close to production as possible. The principle here is: “What is not tested, breaks.”

Why Frequent Testing Is Critical for AI Model Optimization

In machine learning, data, conditions, and user scenarios are constantly changing. If you test a model once a quarter, it can accumulate dozens of small errors and degrade in performance. Iterative testing allows you to:

- quickly identify metric drops;

- test hypotheses without risk to the main system;

- adapt the model to new data faster.

What Are Feedback Loops in ML

A feedback loop is a closed process in which the results of the model’s work affect its further training. For example, a platform’s recommendations generate user clicks, these clicks become new training data, and the model is updated with this data. Without such loops, the model freezes in place and stops responding adequately to changes in the environment.

Production Testing Methods

Testing in production is not a risky game with users, but a strategic way to test a model in real conditions while maintaining control over the results. Such methods allow you to identify weak points and conduct AI model optimization without compromising key business processes.

- A/B testing — parallel launch of several versions of the model on different user groups to compare the results.

- Shadow deployment — a silent launch of a new version of the model that receives real data but does not affect the final answers. This allows you to evaluate its performance live without risking business processes.
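
Both methods reduce to a small amount of routing logic. The sketch below is a simplified illustration, not a production serving layer: models are plain callables, and the names `ab_bucket`, `serve_with_shadow`, `salt`, and `shadow_log` are assumptions made for the example.

```python
import hashlib


def ab_bucket(user_id, treatment_share=0.5, salt="exp-1"):
    """Deterministically assign a user to 'control' or 'treatment'."""
    # Hashing keeps the assignment stable: the same user always
    # lands in the same group for a given experiment salt.
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    fraction = int(digest[:8], 16) / 0x100000000  # value in [0, 1)
    return "treatment" if fraction < treatment_share else "control"


def serve_with_shadow(features, primary_model, shadow_model, shadow_log):
    """Answer with the primary model; only record the shadow model's output."""
    answer = primary_model(features)
    try:
        shadow_log.append({
            "features": features,
            "primary": answer,
            "shadow": shadow_model(features),
        })
    except Exception as exc:  # a failing shadow model must never affect users
        shadow_log.append({
            "features": features,
            "primary": answer,
            "shadow_error": repr(exc),
        })
    return answer
```

The important property of the shadow path is that its result is logged and never returned, so the new version can be compared with the current one offline on exactly the same live inputs.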

Tinkogroup Case Study

A logistics client had a problem: its demand forecasting model lost accuracy during peak seasons. We implemented an automated testing pipeline that includes A/B tests and shadow deployments. Each model update is now tested on a test market segment and in shadow mode across the entire system. This cut the number of failures to a third and sped up the rollout of updates by 25%.

Sign 5 — Misalignment Between Business Goals and Model Outputs

Even a machine learning workflow that is perfect from an engineering perspective can fail if the model solves the “wrong” problem. When the technical team and the business speak different languages, metrics improve, but the real effect for users and the company remains zero.

How the Gap Arises

Often, engineers focus on improving metrics like accuracy or F1-score, without asking whether this will lead to increased sales, customer retention, or cost reduction. As a result, the model can demonstrate brilliant results in tests, but not bring business value.

Examples of Misalignment

The gap between the model metrics and real business results is often noticeable only after implementation. On paper, the indicators are excellent, but in reality, the effect is zero or even negative. Below are several typical scenarios when the model is formally successful, but does not bring value to the company.

- The model recognizes objects in photos with 98% accuracy, but the business needs to process orders faster, and this metric has not improved.

- The algorithm predicts demand with a low error, but the purchasing department still makes decisions manually, ignoring its recommendations.

How to Align Model Goals with Business KPIs

Even the most accurate model loses its value if its results do not affect the company’s key indicators. AI model training should be based on a clear answer to the question: how exactly will this model help achieve business goals? Aligning technical metrics with KPIs is a way to transform a model from a laboratory prototype into a growth tool. In many AI-driven products, this alignment also depends on how model outputs are delivered to end users.

This is where adaptive user interfaces that respond to context, behavior, and usage patterns play a critical role, ensuring predictions translate into real, measurable business impact rather than remaining abstract technical results.

- At the start of AI model training, determine which key performance indicators (KPIs) will measure success.

- Include business representatives in the team to jointly formulate requirements.

- Regularly compare the results of the model with business metrics: ROI, LTV, NPS, cost reduction.

Example from Tinkogroup’s Practice

A large retailer contacted us with a complaint: its recommendation system scored well on precision and recall, but sales were not growing. We audited the machine learning workflow and found that the model recommended products with a high probability of purchase but a low margin. We revised the objective function and added a profitability factor to the algorithm. The result was a 12% increase in ROI with the accuracy metrics unchanged. This is an example of how AI training best practices help synchronize technical and business goals.
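
The case study does not say how the profitability factor was built into the algorithm. One simple possibility, shown here purely as an assumption, is to rank candidates by expected profit (purchase probability multiplied by unit margin) instead of by purchase probability alone. The field names `p_buy` and `margin` are hypothetical.

```python
def rank_by_expected_profit(candidates, top_k=3):
    """Order items by purchase probability times unit margin."""
    scored = [
        {**item, "expected_profit": item["p_buy"] * item["margin"]}
        for item in candidates
    ]
    # Highest expected profit first.
    scored.sort(key=lambda item: item["expected_profit"], reverse=True)
    return scored[:top_k]


# Made-up catalogue: A is the most likely purchase but earns the least.
catalogue = [
    {"sku": "A", "p_buy": 0.30, "margin": 1.0},
    {"sku": "B", "p_buy": 0.10, "margin": 8.0},
    {"sku": "C", "p_buy": 0.20, "margin": 2.0},
]
ranked = rank_by_expected_profit(catalogue)
```

With these illustrative numbers, ranking by probability alone would put A first, while ranking by expected profit puts B first. That is the kind of shift that can leave precision and recall almost unchanged while the business metric moves.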

How to Implement AI Training Best Practices

Good AI model training is not a one-time algorithm tweak, but a whole system of processes that constantly adapts to new data, tasks, and market conditions. To minimize model performance issues and increase AI team productivity, companies must build a sustainable ecosystem in which technical work directly supports business goals.

- Continuous iteration. The model should be regularly reviewed and retrained as new data comes in. This not only improves AI accuracy, but also reduces the risk of degradation in production. Example: using automated pipelines that trigger retraining when a drop in key metrics is detected.

- Data quality management. Data quality determines the accuracy limit of any model. Data quality control in AI training includes source selection, anomaly monitoring, and regular label checks. The best teams use a combination of EDA, automatic validators, and manual inspections.

- Performance monitoring. Track not only accuracy but also how the model behaves in real time. This allows you to respond quickly to model performance issues caused by changes in user behavior or the emergence of new patterns in the data.

- Business alignment sessions. Regular meetings between ML engineers, product managers, and business analysts help synchronize goals. This approach ensures that the machine learning workflow remains focused on achieving KPIs, and not just improving abstract metrics.

- Cross-functional collaboration. A strong AI team works at the intersection of technology, analytics, and domain expertise. Involving experts from related departments (marketing, logistics, sales) allows you to see the problem from different angles and find more practical solutions.
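
The automated retraining trigger mentioned under continuous iteration can be sketched as a rolling check against a baseline. This is a minimal illustration under assumed parameters: the class name, `max_drop`, and `window` are hypothetical, and a real pipeline would start a retraining job where this code only returns `True`.

```python
from collections import deque


class RetrainTrigger:
    """Signal retraining when a rolling metric falls below its baseline."""

    def __init__(self, baseline, max_drop=0.05, window=3):
        self.baseline = baseline            # metric value at deployment time
        self.max_drop = max_drop            # tolerated drop before retraining
        self.recent = deque(maxlen=window)  # most recent measurements

    def observe(self, value):
        """Record a new measurement and return True if retraining is due."""
        self.recent.append(value)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough evidence yet
        rolling = sum(self.recent) / len(self.recent)
        return (self.baseline - rolling) > self.max_drop
```

Averaging over a window rather than reacting to a single measurement avoids retraining on one noisy day while still catching a sustained decline.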

Tinkogroup Case

A client from the e-commerce sector had personalization models that lost effectiveness every 3-4 months. We implemented a full set of AI training best practices: automatic retraining every two weeks, real-time data quality control, and monthly business sessions on KPIs. The result was an 18% increase in conversion, and the time to respond to a drop in metrics fell from weeks to several hours.

Conclusion

In today’s world, model training is not just a development stage but a strategic asset for a company. Mistakes in training can be costly: slow releases, missed opportunities, decreased user confidence, and direct loss of profit. The signs we’ve looked at, from unstable model performance to misalignment with business goals, signal that it’s time to reconsider your approach to AI model optimization.

By applying AI training best practices — constant iteration, data quality control, performance monitoring, synchronization with KPIs, and cross-functional interaction — you create a sustainable foundation for growth and competitive advantages.

Tinkogroup is a trusted partner for companies working with AI and data-driven technologies. We support businesses at different stages of their AI journey, helping them build reliable processes and long-term solutions that scale.

Get in touch with our team to see how high-quality data services can support your AI and machine learning initiatives.

Why does an AI model perform well in testing but fail in production?

This usually happens due to poor data quality, lack of representative training data, overfitting, or missing feedback loops. Models may show high accuracy in controlled environments but break down in real-world conditions if they are not regularly tested, monitored, and retrained on fresh data.

How can teams reduce long model training cycles without losing accuracy?

The most effective approach is short, iterative training cycles with frequent validation. Techniques such as early stopping, distributed training, cloud-based scaling, and automated evaluation help teams avoid wasting resources on ineffective epochs while accelerating experimentation and deployment.

How do you align AI model training with real business goals?

Alignment starts by defining business KPIs (ROI, conversion rate, cost reduction) before training begins. Models should be evaluated not only on technical metrics like accuracy or F1-score but also on their impact on business outcomes, with regular feedback loops between ML teams and stakeholders.